By the way, is the current shapekey system based on FACS? To me, it seems like a pretty promising system to base a shape-key expression system on.

Currently it’s not, but for my project (https://surafusoft.eu/facsvatar/) I needed them to be FACS compliant, so I wrote a converter function from FACS data to MB’s Shape Keys: https://github.com/NumesSanguis/FACSvatar/tree/master/modules/02_facs-blendshapes/app

I engineered them manually, just by looking at the character (took quite some time :P). That means they are NOT FACS certified, but they should give a result close to it.
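To illustrate what such a converter does, here is a minimal Python sketch (not the code in the linked repository): each Action Unit is mapped to one or more shape keys with a weight, and the contributions are summed and clamped. The AU-to-key table and the shape key names are hypothetical placeholders, and AU intensities are assumed to be normalized to 0..1.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping: each FACS Action Unit (AU) drives one or more
# shape keys with a per-key weight. The key names are placeholders,
# not the lab's actual shape key names.
AU_TO_SHAPEKEYS: Dict[str, List[Tuple[str, float]]] = {
    "AU01": [("brow_inner_up_L", 1.0), ("brow_inner_up_R", 1.0)],
    "AU06": [("cheek_squint_L", 0.8), ("cheek_squint_R", 0.8)],
    "AU12": [("mouth_smile_L", 1.0), ("mouth_smile_R", 1.0)],
}


def facs_to_shapekeys(au_values: Dict[str, float]) -> Dict[str, float]:
    """Convert AU intensities (assumed 0..1) into shape key values (0..1).

    Contributions from several AUs to the same key are summed and then
    clamped, because shape key values are normally kept in 0..1.
    Unknown AUs are ignored.
    """
    keys: Dict[str, float] = {}
    for au, intensity in au_values.items():
        for key_name, weight in AU_TO_SHAPEKEYS.get(au, []):
            keys[key_name] = keys.get(key_name, 0.0) + intensity * weight
    return {name: min(max(value, 0.0), 1.0) for name, value in keys.items()}
```

For example, `facs_to_shapekeys({"AU12": 0.5, "AU06": 1.0})` sets both smile keys to 0.5 and both cheek keys to 0.8. A real converter would also need to handle AUs that work against each other on the same region, which a plain sum does not.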

I’m currently in the process of rewriting the structure of my app, so the project should be more stable around the beginning of April. The documentation should also be done by then. Additionally, I’m planning to add functionality to use the extracted facial data to create keyframes for facial animation of MB’s characters. I cannot give you a timeline on this, though.

Versions:

Blender v2.79

Manuel Bastioni Lab 1.61


It only happens for my shirts on these characters, not pants.

What is a “template model”? I fitted the shirt manually on one character, exported only the shirt and then used proxy fit on the shirt + a new similar character.

How I reproduce it:

- Open an empty blender file

- Initialize and finalize an f_as01

- Import a shirt that is just a mesh, fit it to the body (no shapekeys)

- Use proxy fit

- Shirt is displaced

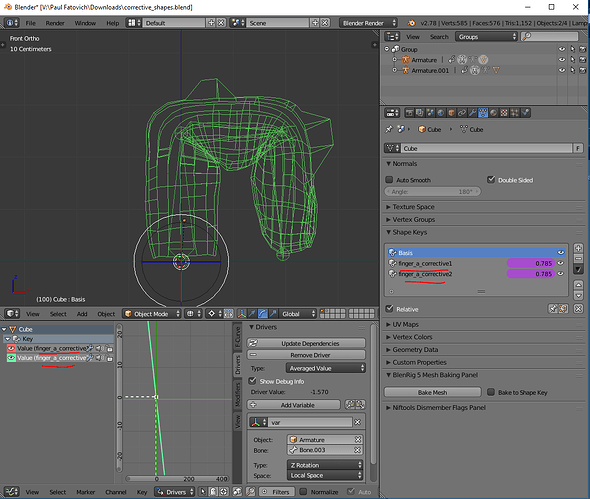

Manuel, regarding bone drivers and muscle morphs you are correct when you say that they are not supported by external engines.

However, I think it is possible to replicate the same behaviour in Unreal and/or Unity by evaluating the current angle between bones to set the morph target / blendshape value: essentially duplicating Blender’s bone driver behaviour within the engine, using either a script or a Blueprint.
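A minimal Python sketch of that idea: compute the angle between the two bones’ direction vectors and map it to a 0..1 morph target weight with a linear ramp. In an engine the same math would live in a Blueprint or script; in Blender, a driver using a Rotational Difference variable does the equivalent. The function names and the example thresholds are hypothetical.

```python
import math
from typing import Sequence


def angle_between(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in degrees between two 3D bone direction vectors (head to tail)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    # Clamp to avoid a math domain error from floating-point rounding.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def corrective_weight(angle_deg: float, start_deg: float, full_deg: float) -> float:
    """Linear ramp: 0 at or below start_deg, 1 at or above full_deg."""
    t = (angle_deg - start_deg) / (full_deg - start_deg)
    return max(0.0, min(1.0, t))
```

For example, `corrective_weight(angle_between(upper_arm_dir, forearm_dir), 30.0, 120.0)` stays at 0 for a straight arm (both bones point the same way) and reaches 1 once the elbow bends 120° or more. A real setup might prefer a smoother curve than a linear ramp.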

Also, if the animation is done within Blender, the bone-driven shape key results can be “baked” into the animation.

An advantage of the bone-driven shape key method is that if the external engine is not set up to handle the bone drivers, the model’s animation will still work as usual with standard weighted bone animation (corrective morph targets always at 0).

In theory, this could be done with the current system’s bendy-bone muscles by creating a script that generates corrective blend shapes based on bone angles. This is what the XMuscle plugin author suggests in this video: Exporting to Unreal and Unity Engines with Shape Keys.

I am going to experiment with this and see if it is feasible.

Either way MLab is an excellent plugin. Great Work!

I want to add another nuance regarding making specific motion capture systems work with your addon. Motion capture systems have an image (at least to me) of being complex, hardware-advanced systems, of which there may not be too many out there. However, with the advances in Deep Learning, precise facial expression tracking is probably becoming less of a hardware problem and more of a software problem. Once such software only requires a depth camera, or even a simple webcam / phone camera, you’ll get an explosion of research groups / companies focusing on facial tracking. Just pulling random numbers here, but while new advanced hardware is now released every 3? years (and is compatible with older versions), this might become months instead (and incompatible). This process has actually already started: http://ieeexplore.ieee.org/abstract/document/8225635/ (review of 300 papers about Face Feature Extraction).

Also, by using an intermediate representation such as FACS, your addon will become indispensable to academic research fields such as Affective Computing, Social Psychology, Neuroscience and other fields that deal with people’s affective states or facial muscles. Moreover, software-based facial extraction often outputs FACS AU values.

Paper: Automatic Analysis of Facial Actions: A Survey (non-member link to .pdf: http://eprints.nottingham.ac.uk/44740/1/taffc-valstar-2731763-proof%20(2).pdf)

Some quotes from that paper:

“As one of the most comprehensive and objective ways to describe facial expressions, the Facial Action Coding System (FACS) has recently received significant attention. Over the past 30 years, extensive research has been conducted by psychologists and neuroscientists on various aspects of facial expression analysis using FACS.”

“The FACS is comprehensive and objective, as opposed to message-judgement approaches. Since any facial expression results from the activation of a set of facial muscles, every possible facial expression can be comprehensively described as a combination of AUs”.

Are you planning to use your muscle system for facial expressions? It seems to really match FACS and would probably give impressive results. If you use that system, you could bake the facial expressions as Shape Keys. This would give people the option to make their own facial expressions by contracting/relaxing muscles (although limited to Blender), but by then baking those, you achieve easy portability.

Fun fact, there is even FACS for horses (EquiFACS): http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0131738

No, the current shapekey system is based on morphs more frequently used in animation.

Thank you. But this would require a special script on the external engine’s side, right?

The template model is the model used to “calibrate” the proxy. In other words, it is the reference model to use for the first manual fitting. If you have not used the template, the proxy will be displaced incorrectly. Take a look here, “Step 1. Prepare the proxy tailored for a base template”: http://www.manuelbastioni.com/guide_a_detailed_tutorial_about_clothes.php#prepare_proxy
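A simplified model of why the template matters, as a Python sketch under stated assumptions (this is not the lab’s actual proxy-fitting code): during calibration each clothing vertex is bound to its nearest body vertex together with an offset, and fitting re-applies those offsets to a new body with the same topology. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def nearest_index(point: Vec3, verts: List[Vec3]) -> int:
    """Index of the body vertex closest to point (brute force)."""
    return min(range(len(verts)), key=lambda i: math.dist(point, verts[i]))


def calibrate(cloth: List[Vec3], template_body: List[Vec3]) -> List[Tuple[int, Vec3]]:
    """Bind each cloth vertex to its nearest template vertex and store the offset."""
    bindings = []
    for p in cloth:
        i = nearest_index(p, template_body)
        b = template_body[i]
        bindings.append((i, (p[0] - b[0], p[1] - b[1], p[2] - b[2])))
    return bindings


def fit(bindings: List[Tuple[int, Vec3]], new_body: List[Vec3]) -> List[Vec3]:
    """Re-apply the stored offsets on a body with the same topology as the template."""
    return [
        (new_body[i][0] + o[0], new_body[i][1] + o[1], new_body[i][2] + o[2])
        for i, o in bindings
    ]
```

In this model, if the shirt was first fitted to a body other than the template, every stored offset also contains the difference between that body and the template, so the fitted shirt ends up shifted by that difference.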

Please tell me if this was the problem.

Using the rigging instead of shapekeys, it will be possible to save each expression as a regular pose file. Then, if I successfully write an algorithm to mix the poses, it will be easy for the final user to create his personal library of expression ‘elements’, to load and mix (and export as shapekeys (*)) as he needs.

In other words, every user will be able to create the “intermediate layer” that best matches his needs.
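The pose-mixing idea could be sketched like this in Python, assuming a hypothetical pose format where each facial bone stores a location offset and a rotation quaternion relative to rest. Locations are blended linearly and rotations with a normalized weighted sum (an nlerp-style blend). This is only one possible mixing scheme, not the algorithm the lab will use.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)
# Hypothetical pose format: bone name -> (location offset, rotation), relative to rest.
Pose = Dict[str, Tuple[Vec3, Quat]]

IDENTITY: Quat = (1.0, 0.0, 0.0, 0.0)


def mix_poses(poses: List[Tuple[Pose, float]]) -> Pose:
    """Blend weighted expression poses into a single pose."""
    acc_loc: Dict[str, List[float]] = {}
    acc_rot: Dict[str, List[float]] = {}
    for pose, weight in poses:
        for bone, (loc, rot) in pose.items():
            if rot[0] < 0.0:  # q and -q are the same rotation; keep w >= 0
                rot = (-rot[0], -rot[1], -rot[2], -rot[3])
            loc_sum = acc_loc.setdefault(bone, [0.0, 0.0, 0.0])
            rot_sum = acc_rot.setdefault(bone, list(IDENTITY))
            for k in range(3):
                loc_sum[k] += weight * loc[k]
            for k in range(4):
                # Add this pose's weighted deviation from the rest rotation.
                rot_sum[k] += weight * (rot[k] - IDENTITY[k])
    result: Pose = {}
    for bone, r in acc_rot.items():
        n = math.sqrt(sum(c * c for c in r)) or 1.0
        result[bone] = (tuple(acc_loc[bone]), tuple(c / n for c in r))
    return result
```

With a single pose at weight 0.5, a 90° jaw rotation comes out as a 45° rotation, so partial expressions behave as expected; combining many large rotations on the same bone is where a more careful scheme would be needed.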

On the other hand, if the user prefers to use a direct capture system, he just has to link the captured feature points to the facial bones.
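A minimal sketch of such a link, assuming a tracker that outputs 2D landmark positions in pixels: the displacement of each landmark from its neutral position is scaled and applied as a location offset on the linked bone. The landmark names, bone names, scale factors and axis mapping are all hypothetical and would depend on the rig’s bone orientations.

```python
from typing import Dict, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]

# Hypothetical link table: tracked landmark -> (facial bone, metres per pixel).
LINKS: Dict[str, Tuple[str, float]] = {
    "mouth_corner_left": ("lip_corner_L", 0.0005),
    "brow_inner_left": ("brow_inner_L", 0.0004),
}


def landmarks_to_bone_offsets(
    neutral: Dict[str, Vec2], current: Dict[str, Vec2]
) -> Dict[str, Vec3]:
    """Turn landmark displacements (pixels) into bone location offsets."""
    offsets: Dict[str, Vec3] = {}
    for landmark, (bone, scale) in LINKS.items():
        if landmark not in neutral or landmark not in current:
            continue  # landmark not tracked in this frame
        dx = current[landmark][0] - neutral[landmark][0]
        dy = current[landmark][1] - neutral[landmark][1]
        # Assumed axis mapping: image x -> bone X; image y grows downward,
        # so it is negated and mapped to bone Z (up).
        offsets[bone] = (dx * scale, 0.0, -dy * scale)
    return offsets
```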

Expressions stored as poses will also be highly portable between all the lab characters, even in future versions (my intention is to design a stable enough set of facial bones). You can already see that it’s still possible to load a body pose created with very old versions of the lab: I’d like to do the same for expressions.

Are you planning to use your muscle system for facial expressions? It seems to really match FACS and would probably give impressive results. If you use that system, you could bake the facial expressions as Shape Keys. This would give people the option to make their own facial expressions by contracting/relaxing muscles (although limited to Blender), but by then baking those, you achieve easy portability.

I’m studying the best way to simulate muscles and skin wrinkles, but I will not use the muscles based on bendy bones.

(*) I’m planning to add this feature (export as shapekeys) too.

As for skin wrinkles, I personally think they should be shader-based rather than geometry-based. There are a number of papers out there on how skin micro-texture changes when it’s stretched, and if you use something like the tension map script, but with something indicating, for example, the UV-coordinate direction of the stretch, then you could very effectively drive wrinkle bump maps.

The downside to this approach is, of course, you need a script to drive the wrinkles. You could also drive it directly from the bones, but that seems unnecessarily difficult.

Wrinkle maps required for full detail would probably consist of: scrunch U, stretch U, scrunch V, stretch V
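As an illustration of the U/V-direction idea, a Python sketch that turns the edge strain around one vertex into the four wrinkle-map weights: each edge’s strain is split between U and V according to its direction in UV space, then positive values drive the stretch maps and negative values the scrunch maps. This is not the tension map script mentioned above; the edge format and the `gain` factor are assumptions.

```python
from typing import Dict, List, Tuple

# One edge around a vertex: (rest length, deformed length, UV delta (du, dv)).
Edge = Tuple[float, float, Tuple[float, float]]


def wrinkle_weights(edges: List[Edge], gain: float = 4.0) -> Dict[str, float]:
    """Estimate stretch/scrunch weights along U and V for one vertex."""
    strain = {"u": 0.0, "v": 0.0}
    total = {"u": 0.0, "v": 0.0}
    for rest, deformed, (du, dv) in edges:
        uv_len_sq = du * du + dv * dv
        if rest <= 0.0 or uv_len_sq == 0.0:
            continue  # skip degenerate edges
        e = deformed / rest - 1.0  # > 0 stretched, < 0 compressed
        # Share of this edge lying along U and along V in texture space.
        wu, wv = du * du / uv_len_sq, dv * dv / uv_len_sq
        strain["u"] += wu * e
        strain["v"] += wv * e
        total["u"] += wu
        total["v"] += wv
    out: Dict[str, float] = {}
    for axis in ("u", "v"):
        s = strain[axis] / total[axis] if total[axis] > 0.0 else 0.0
        out["stretch_" + axis] = min(1.0, max(0.0, s) * gain)
        out["scrunch_" + axis] = min(1.0, max(0.0, -s) * gain)
    return out
```

The four values map onto the scrunch U, stretch U, scrunch V and stretch V maps, and could be used as mix factors for the corresponding bump maps.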

By the way, are you familiar with the [undertone + darkness] 2-axis skin-tone system? It’s used a lot in makeup.

It seems like an interesting, flexible and intuitive skin-tone description system. Right now, it seems like you only support one of those 2 axes (darkness).

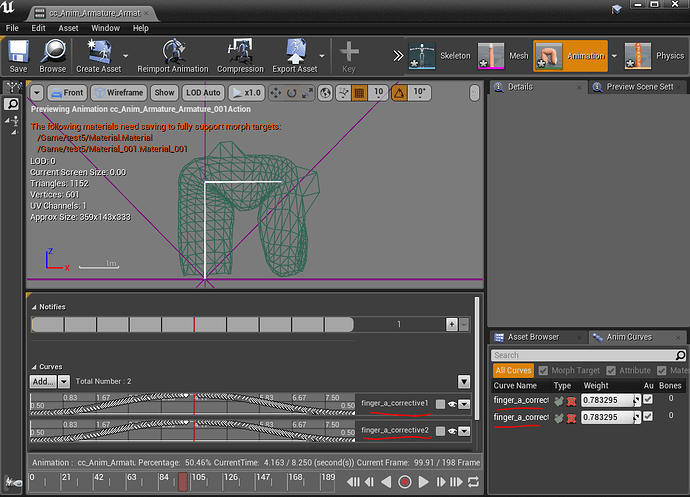

The special script on the external engine is not needed if the animations are exported from Blender.

Blender can bake the bone-driven shape keys during FBX export, and the corrective blendshapes will automatically be set when playing back the animation.

I created a simple animation with exaggerated bone-driven shape keys in Blender and exported it to FBX. Then I imported the FBX into Unreal Engine; no other setup was needed.

The two screenshots below show the exaggerated deform shape key animation working in both Blender and UE4.

Hey Manuel. This is a wonderful add-on! I’ve been using it for a while. As far as I understand, the main focus with this add-on is high quality and close-up detail. I was wondering if for future versions you could consider adding an option of using LOD versions of the models, if people want to increase performance and use the humanoids to populate a larger scene? Thanks.

Facerig with corrective shapekeys will be better, I think.

I’m thinking of splitting the problem into two parts, which will be implemented in two successive releases:

- Macro wrinkles: geometry-based. I’m working on a new topology that will be able to reproduce the main wrinkles.

- Detail wrinkles: texture-based. I’m still evaluating the best way to implement it. I’d rather not create dependencies on scripts that are not officially part of Blender’s built-in set. On the other hand, I don’t like to reinvent the wheel…

Thank you.

Thank you Pavs!!

This is a very interesting thing. Have you used any particular FBX settings for the export?

I’m planning to add low poly models (for humans only) in lab 1.7. Later I will add low poly for anime and fantasy.

Hi Manuel,

First thing: your work is amazing.

Second thing: I’m sort of a noob with Blender, so pardon me if my question sounds stupid or disrespectful. I don’t know the technical aspects of armatures/animation.

My question is:

If I want to use a model generated with the Lab as a “base structure” for some 3D sculpting, which steps must I follow to avoid funky behaviour of any sort? And if I pose the model just before deciding to sculpt it, how can I retain that same pose?

(To be as precise as possible: generate a body after doodling with the parameters -> as an optional step, give it a pose (or not) -> “extract” that model (posed or not) and sculpt it -> how?)

I can verify those aren’t supported in Unity either. I don’t even generate them to begin with to avoid confusion.

Also I’m pretty sure “bone driven shape keys” wouldn’t work in Unity either. At least, I’ve never heard of such a thing.

I am fairly new to UE4 and am still working out the best workflow to get assets from Blender to UE4, especially the MLab characters.

The only setting that I am sure needs to be unchecked is Apply Modifiers in the Geometries panel.

Also, I check everything in the Animation panel, but I’m not sure if that is strictly needed.

By default, one Blender unit is treated as 1 m, while one UE4 unit is 1 cm, so I always scale everything by 100 and apply. I have read that the FBX exporter should take care of this automatically via its scale parameter, but I have not had success with this when it comes to armatures.

Here is a screenshot of the main FBX export settings I use in Blender.
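For scripted exports, roughly the same settings could be passed to Blender’s FBX exporter operator, `bpy.ops.export_scene.fbx`. This is a sketch assuming the parameter names of the exporter bundled with Blender 2.79 (`use_mesh_modifiers` is Apply Modifiers, the `bake_anim*` options are the Animation panel checkboxes); the two helper function names are hypothetical, and the 100x scaling is left to the manual step described above.

```python
def fbx_export_settings(filepath: str) -> dict:
    """Keyword arguments for bpy.ops.export_scene.fbx mirroring the settings above."""
    return {
        "filepath": filepath,
        # Geometries panel: "Apply Modifiers" unchecked.
        "use_mesh_modifiers": False,
        # Animation panel: everything checked (may not all be strictly needed).
        "bake_anim": True,
        "bake_anim_use_all_bones": True,
        "bake_anim_use_nla_strips": True,
        "bake_anim_use_all_actions": True,
        "bake_anim_force_startend_keying": True,
    }


def export_fbx(filepath: str) -> None:
    """Run the export; only works inside Blender, where bpy is available."""
    import bpy

    bpy.ops.export_scene.fbx(**fbx_export_settings(filepath))
```

Keeping the settings in a plain dictionary makes it easy to reuse the same export for every MLab character from Blender’s Python console.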

When importing into UE4, make sure Import Morph Targets is checked; it is in the advanced Mesh section of the FBX import window.

I have attached the blend file and the FBX export in case you want to experiment (without the exaggerated corrective blend). I got the blend file from this YouTube video.

corrective_shapes.zip (221 KB)

volfin2, you are correct: “bone driven shape keys” would need to be set with a special animator controller script that reads the angle between bones, but Unity does support corrective blend shapes just like UE4 does.

Here is a screenshot of the same FBX file I imported into UE4, now in Unity. Notice the bottom 2 animation tracks: those are the blendshape keys.

After you find an efficient workflow for this process, would you mind creating a UE4 page here: http://manuelbastionilab.wikia.com/wiki/Manuel_Bastioni_Lab_Wiki and writing down the steps you took? That would save other users from having to figure everything out again, and other users could contribute tips to improve your workflow even more.

This was indeed the problem! Thank you for your help.