I apologize for the length of this post. It’s longer than I’d prefer, but given the folks involved, I suspect the shreds of what I’ve reiterated elsewhere would benefit from being collected here in one place, with the hope that some folks reading might gain more control over otherwise slippery software, application interfaces, and general nightmare confusion.

Bear in mind that the idea of “middle grey” is the connection between the radiometric-like quantities of light and some sort of “fully adapted” perceptual judgement. That is, if we stare at a display with a block on the right emitting 100% sRGB R=G=B light and a block on the left emitting 0%, 0%, 0% light, a value that appears to sit in the “middle of the two” is approximately 18-20% of the display’s emission in radiometric terms.

That makes middle grey the only viable anchor, as the upper and lower ranges within a scene can vary tremendously.
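As a rough cross-check of that 18-20% figure, here is a small sketch that assumes CIE 1976 L* as the model of “perceived lightness” (a swapped-in standard model, not the only way to define middle grey). Inverting L* = 50, the perceptual midpoint on that scale, gives the relative luminance it corresponds to.

```python
def lstar_to_relative_luminance(lstar: float) -> float:
    """Invert CIE 1976 L* to relative luminance Y, with diffuse white at Y = 1.0."""
    kappa = 24389.0 / 27.0  # CIE constant for the linear segment near black
    if lstar > 8.0:
        # Cube-root segment of the L* definition, inverted.
        return ((lstar + 16.0) / 116.0) ** 3
    return lstar / kappa


middle_grey = lstar_to_relative_luminance(50.0)
print(f"L* = 50 corresponds to Y = {middle_grey:.4f}")  # prints 0.1842
```

Under this model, “halfway” in appearance lands at roughly 18.4% of the emission, squarely inside the 18-20% range.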

Takeaways:

- Displays output radiometric light ratios. Always.

- “Middle grey” is a photometric evaluation of light. That is, fully adapted, it “appears” halfway between the maximum and minimum adapted values, but in radiometric terms, the display would be outputting 18-20% emission.

- “Middle grey” is not 50% of the display’s emission for this reason, and 50% radiometric albedo is radically different from “halfway light” between the maximum and minimum output of your display.
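To put numbers on that last point, here is a sketch that again assumes CIE L* as the lightness model, plus the piecewise sRGB transfer function as the display encoding (many real displays follow a pure 2.2 power instead, which shifts the figures slightly). It shows how light 50% emission reads, and how little light the “halfway” encoded code value actually emits.

```python
def relative_luminance_to_lstar(y: float) -> float:
    """CIE 1976 L* from relative luminance Y (diffuse white at Y = 1.0)."""
    epsilon = 216.0 / 24389.0
    kappa = 24389.0 / 27.0
    if y > epsilon:
        return 116.0 * y ** (1.0 / 3.0) - 16.0
    return kappa * y


def srgb_decode(code_value: float) -> float:
    """Piecewise sRGB transfer function: encoded value -> linear emission (0..1)."""
    if code_value <= 0.04045:
        return code_value / 12.92
    return ((code_value + 0.055) / 1.055) ** 2.4


# Half of the display's radiometric output reads as much lighter than "halfway".
print(f"50% emission -> L* {relative_luminance_to_lstar(0.5):.1f}")  # 76.1
# Half of the encoded signal range is only about a fifth of the emission.
print(f"sRGB code value 0.5 -> {srgb_decode(0.5):.3f} emission")  # 0.214
```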

Phew. Moving along…

How a mix of pixels appears depends on the pixels around it. If you mix a very high intensity with a very low intensity, the result will be somewhere between them, but relative to the pixels surrounding that result, the contrast may increase or decrease depending on the surrounding values! More on that below.
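A toy illustration of that surround dependence, under the simplifying assumption that a log2 ratio against a single neighbouring value is a fair proxy for local contrast (it is not a perceptual model, and the surround values are hypothetical): the very same linear mix lands well above a dark neighbour, roughly level with a mid one, and well below a bright one.

```python
import math


def mix(a: float, b: float, t: float = 0.5) -> float:
    """Blend two linear-light (radiometric-like) values; t = 0.5 is an even mix."""
    return (1.0 - t) * a + t * b


def stops_from_surround(value: float, surround: float) -> float:
    """Log2 ratio: positive means brighter than the surround, negative means darker."""
    return math.log2(value / surround)


mixed = mix(1.0, 0.01)  # a very high and a very low intensity, mixed evenly
for surround in (0.05, 0.5, 2.0):  # hypothetical neighbouring pixel values
    print(f"surround {surround}: {stops_from_surround(mixed, surround):+.2f} stops")
```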

Nuanced observation! This is likely due to gamut mapping mishandling in a camera. More on that below. Your willingness to “hold back” is absolutely wise, as hopefully I can clarify below.

Remember, I described it back in 2016 as a “desaturation” because that was the language that made the most sense to me at the time. It has always been a gamut mapping approach. Filmic in Blender is not “just a tone map”, but actually a gamut map. More on that below.

This is a fundamental element of the RGB encoding model. In a well-defined RGB colour space, of which sRGB is one, mixing in the opposite of any primary (the other two primaries combined) pulls the value toward the achromatic axis. That means that adding purely saturated sRGB green and blue to an sRGB red will pull the red directly toward the achromatic point.
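A small sketch of that pull toward the achromatic axis, assuming linear sRGB values and the rounded sRGB-to-XYZ matrix from IEC 61966-2-1. As more green and blue are mixed into pure red, the CIE xy chromaticity walks in a straight line toward the D65 white point, reaching it when all three are equal.

```python
import math

# Linear sRGB (D65) to CIE XYZ, rounded coefficients from IEC 61966-2-1.
SRGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)
D65_XY = (0.3127, 0.3290)  # chromaticity of the sRGB achromatic axis


def chromaticity(rgb):
    """Linear sRGB triplet -> CIE xy chromaticity."""
    x_, y_, z_ = (sum(m * c for m, c in zip(row, rgb)) for row in SRGB_TO_XYZ)
    total = x_ + y_ + z_
    return x_ / total, y_ / total


def distance_to_achromatic(rgb):
    """Euclidean xy distance from the D65 white point (0 means achromatic)."""
    x, y = chromaticity(rgb)
    return math.hypot(x - D65_XY[0], y - D65_XY[1])


for t in (0.0, 0.25, 0.5, 1.0):
    mixture = (1.0, t, t)  # pure red plus t units each of pure green and blue
    print(f"t = {t}: distance {distance_to_achromatic(mixture):.4f}")
```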

Believe it or not, these sorts of issues have been around forever. For example, old-school bleach bypass processing produced exceptionally contrasty results, so when lighting, people had to hold back and account for it. The same applies in the opposite direction with processes that decrease contrast.

Performing grading on scene-referred ratios is mandatory to emulate how light transport would behave. There are some exceptionally rare edge cases where display-linear manipulations are required. I can expand on this if anyone is at all interested, as it’s probably another long-ish exploration.
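A quick sketch of why the domain matters, assuming a hypothetical three-pixel scene and the piecewise sRGB transfer function standing in for the “display encoding”. Doubling the scene-referred values is one stop more light and keeps every ratio intact; doubling the encoded values instead multiplies each pixel’s emitted light by a different factor, none of them 2.

```python
def srgb_encode(linear: float) -> float:
    """Piecewise sRGB inverse transfer function: linear emission -> encoded value."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1.0 / 2.4) - 0.055


def srgb_decode(code_value: float) -> float:
    """Piecewise sRGB transfer function: encoded value -> linear emission."""
    if code_value <= 0.04045:
        return code_value / 12.92
    return ((code_value + 0.055) / 1.055) ** 2.4


scene = [0.02, 0.05, 0.18]  # hypothetical scene-referred pixel values

# One stop more light, applied to the scene-referred ratios: every pixel doubles.
scene_graded = [2.0 * v for v in scene]

# The "same" doubling applied to display-encoded values instead.
encoded_graded = [srgb_decode(2.0 * srgb_encode(v)) for v in scene]

for original, a, b in zip(scene, scene_graded, encoded_graded):
    print(f"{original}: scene-referred x{a / original:.2f}, encoded x{b / original:.2f}")
```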

See above. Keep a clear distinction between the photometric domain, what we process in our magical psychophysical systems, and the radiometric domain, the stimulus that feeds that process.

Ultimately, it’s a gamut question.

It’s wise to evaluate what the RGB values mean in practical terms. For example, an sRGB-like display is a useful reference.

If we fire three equal-energy sRGB lights onto an albedo of 0%, 0%, 100%, the blue channel will reflect back all of the blue light’s energy. But what happens when that energy escapes the gamut volume of the output device? Answer: it just sits there as the most intense blue the display can produce. So there’s a cognitive “reading” of imagery involved; the blue sits at the exact same display-referred output intensity because that’s the limit of the output gamut volume.
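A minimal sketch of that “stuck” blue, assuming the naive case of a per-channel clip to the display’s [0, 1] range with no gamut mapping at all, and light intensities in arbitrary units. Past the display limit, more light changes nothing on screen.

```python
def display_clip(rgb):
    """Naive per-channel clip to the display's [0, 1] range (no gamut mapping)."""
    return tuple(min(max(c, 0.0), 1.0) for c in rgb)


albedo = (0.0, 0.0, 1.0)  # 0%, 0%, 100% reflectance

for light in (0.5, 1.0, 2.0, 8.0):  # equal-energy light intensity, arbitrary units
    reflected = tuple(light * a for a in albedo)
    print(f"light {light}: reflected {reflected} -> display {display_clip(reflected)}")
```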

Likewise, if we have an albedo with ratios such as 0%, 50%, and 100%, we have another gamut volume problem. As we increase the intensity of the three equal-energy sRGB lights we fire into the scene, what happens when the reflected energy exceeds the display’s gamut volume? The answer is that our ratios skew; we lose the intention of the original scene values, and eventually the completely wrong mixture results, with the sRGB display emitting 0%, 100%, 100%, utterly detached from the original albedo ratios of 0%, 50%, 100% reflectance. Our tealy-blue albedo here has skewed into a nasty cyan!
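The same naive per-channel clip (an assumption standing in for “no gamut mapping”) makes the skew visible. In the scene the green/blue ratio is always 0.5; on the display it drifts to 1.0 once the green channel also hits the ceiling, which is exactly the cyan.

```python
def display_clip(rgb):
    """Naive per-channel clip to the display's [0, 1] range (no gamut mapping)."""
    return tuple(min(max(c, 0.0), 1.0) for c in rgb)


albedo = (0.0, 0.5, 1.0)  # the tealy-blue 0%, 50%, 100% reflectance

for light in (1.0, 1.5, 2.0, 4.0):
    shown = display_clip(tuple(light * a for a in albedo))
    # In the scene the green/blue ratio is always 0.5; watch it drift on the display.
    print(f"light {light}: display {shown}, G/B ratio {shown[1] / shown[2]:.2f}")
```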

So gamut mapping, in the clearest of terms, is not optional. The difference in volumes must be accounted for, and the output value transformed in an attempt to maintain the intention of the scene’s radiometric-like ratios. Do you expect to see the cyan, or something closer to the intention in the albedo value? What about heavily saturated and hugely intense light sources? Imagine a hugely bright red, green, blue, or magenta light beam. Would we expect that beam to remain “stuck” at full intensity on the display, skew wildly, or do something else? I can’t believe I am about to do this, but in the interest of gamut mapping logic…
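For contrast with the clip, here is a deliberately simple, hypothetical gamut compression. It is not Filmic’s algorithm, just a toy to show the idea of accounting for the volume difference. Out-of-range triplets are scaled down to keep their ratios, then blended toward the achromatic axis by an amount that grows with the excess, so an intense beam heads toward white while staying on the line between its original chromaticity and the achromatic axis. The `1 - 1/peak` curve is an arbitrary choice.

```python
def toy_gamut_compress(rgb):
    """Hypothetical toy mapper, NOT Filmic's algorithm.

    Values within the display range pass through. If the peak channel exceeds 1.0,
    the triplet is scaled down to preserve its ratios, then blended toward the
    achromatic axis (1, 1, 1) by an amount that grows with the excess.
    """
    peak = max(rgb)
    if peak <= 1.0:
        return tuple(rgb)
    scaled = [c / peak for c in rgb]  # ratios kept, peak channel now 1.0
    towards_white = 1.0 - 1.0 / peak  # 0 at the limit, approaches 1 as peak grows
    return tuple(c * (1.0 - towards_white) + towards_white for c in scaled)


albedo = (0.0, 0.5, 1.0)  # 0%, 50%, 100% reflectance
for light in (1.0, 2.0, 4.0, 64.0):
    print(light, toy_gamut_compress(tuple(light * a for a in albedo)))
```

Unlike the clip, the chromatic direction survives: at four times the light, (G - R) / (B - R) is still 0.5, matching the albedo, while the result grows paler rather than turning cyan.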

In order to hit your target, you’ll need to define what those meaningless words mean in practical pixel terms, as a starting point. What does “crispy” mean? Contrast? If so, think about what contrast means.

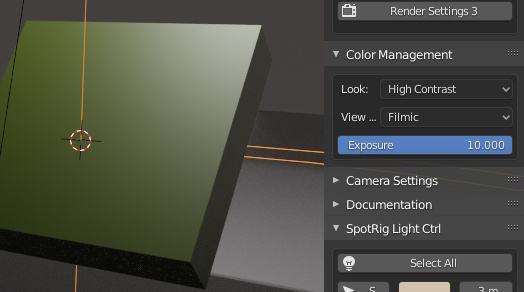

In the simplest case, there are two things that are readily controllable:

- Exposure

- Contrast

In the radiometric-like domain, exposure is a multiplication of intensities, so the values move away from or toward zero. Contrast, on the other hand, in the simplest case, can be described as pushing values away from or toward each other.
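A minimal sketch of both controls on scene-linear values. Exposure is the plain multiplication described above. For contrast, one common simple form is assumed here: a power function around a pivot, with the pivot set to a middle grey of 0.18 (both the form and the pivot are assumptions, not a specific application’s implementation). In log2 terms it multiplies each value’s distance in stops from the pivot.

```python
def exposure(value: float, stops: float) -> float:
    """Exposure in scene-linear terms: multiply by 2**stops (toward/away from zero)."""
    return value * 2.0 ** stops


def contrast(value: float, amount: float, pivot: float = 0.18) -> float:
    """Hypothetical power-law contrast around a pivot (assumed middle grey of 0.18).

    In log2 terms, each value's distance in stops from the pivot is multiplied by
    `amount`: > 1 pushes values away from each other, < 1 pulls them together.
    Requires value > 0.
    """
    return pivot * (value / pivot) ** amount


for v in (0.045, 0.18, 0.72):  # two stops under, at, and two stops over the pivot
    print(v, exposure(v, 1.0), contrast(v, 1.5))
```

The pivot itself never moves under `contrast`, which is why middle grey makes a sensible anchor for it.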

In both cases, as we push values away or toward, the overall intensities may land well under or well over the display / output gamut volume. This means that both exposure and contrast come with trade-offs; we can’t expect to keep the values within the gamut volume of the output device if we increase them, and if we compress them too far we can also introduce image problems.
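A small sketch of that trade-off using exposure alone, with hypothetical scene-linear samples. The display maximum is taken as 1.0, and `DISPLAY_FLOOR` is an assumed, purely illustrative lower limit. Push exposure up and more samples overshoot the output range; pull it down and the darkest samples sink below the floor.

```python
scene = [0.005, 0.02, 0.09, 0.18, 0.36, 0.72, 1.44]  # hypothetical scene-linear samples
DISPLAY_MAX = 1.0      # top of the display's output range
DISPLAY_FLOOR = 0.001  # assumed smallest useful output level; purely illustrative


def exposed(values, stops):
    """Scale scene-linear values by 2**stops."""
    return [v * 2.0 ** stops for v in values]


def count_outside(values):
    """Return (how many exceed the display maximum, how many fall under the floor)."""
    over = sum(1 for v in values if v > DISPLAY_MAX)
    under = sum(1 for v in values if v < DISPLAY_FLOOR)
    return over, under


for stops in (-3, 0, 2):
    print(f"{stops:+d} stops: (over, under) = {count_outside(exposed(scene, stops))}")
```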

Phew. Ok enough for now. Hope that someone has pulled something useful out of this huge spew of bullshit.