For you. I have scenes that cannot fit in GPU VRAM that render an order of magnitude faster and cleaner because of PG.

Repeating that it is of no benefit doesn’t make it true.

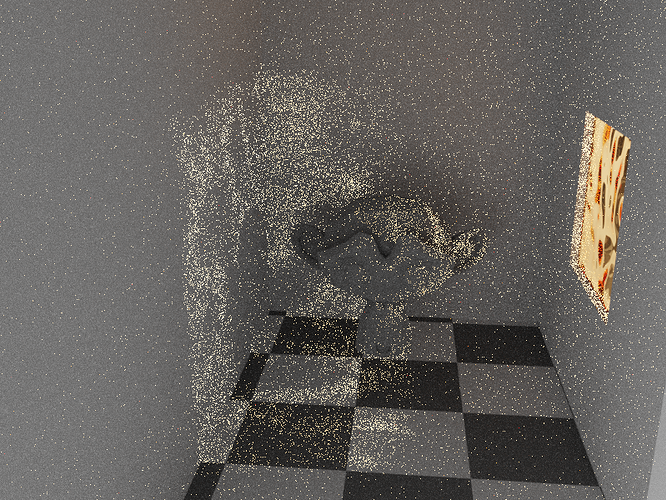

Take a look at these renders I did. Then look at renders others have done both in the posts above and below. Path guiding has very clear benefits - in the right scenes (your scene just happens not to be one of them).

OK, I’ll take that as a case scenario. I tend to work to get my scenes on GPU VRAM, but if I didn’t have to worry about that, then I agree.

BUT, it encourages future artists to get lazy. We know how to make it work on GPU (and bear in mind, I’m limited to 8 GB VRAM), but future generations won’t have those limitations to work within. Rather, their scenes will just get more complex to fill the available RAM and render speed.

Reminds me of my programming days. We used to work to optimise performance. As performance improves, we work to optimise for ease.

I’m not talking about MLS, though. I’m looking specifically at PG. Your examples are fine examples of why MLS is worthwhile.

EDIT: Would you be prepared to re-run your tests without MLS?

Neither am I - it says in the captions above each of the 4 images I uploaded.

The first and third images don’t have MLS enabled. Neither do many of the images from myself and other contributors in the posts above and below.

Some other examples

I really wish y’all would stop trying to rush things. Massive features being worked on by often a single person need time to be done right or we’ll be complaining about bugs, or worse, bad decisions, for the next 4 years or longer.

I need a breakdown of what’s happening here.

Are you suggesting we should all stop wasting our time doing PG test renders and discussing the results? Why? Do you think blender should stop trying to integrate the library and the people working on the library should also stop?

There is soon going to be a test build that adds guiding support for glossy rays (see this week’s meeting notes for rendering).

One of the key points here is having Cycles work smarter and not harder. In some cases, yes, brute forcing with a good GPU might still be faster, but then again, I have noticed a dramatic speedup in the rendering of certain lighting effects within various scenes (which even a good GPU can’t brute force past in the same amount of time).

Finally, OpenPGL is a relative infant as far as libraries go (not even at version 1.0), so just give it some time. OIDN for instance was not that great in many cases early on either, but what it can do now does not even compare to when it was first implemented in Blender.

This is the key point I made earlier.

PG is not a magic bullet whereby turning it on = less noise/faster renders in all situations. It’s another tool in the box to be used in the right context. Cycles already has many of these.

For some scenes it’ll make a dramatic difference, and even make previously impossible scenes possible.

For other scenes, the results could be worse or slower.

As the function is developed more - hopefully more and more scenes/scenarios will fall into the former category and fewer into the latter.

Its application is limited only as long as you set up scenes with bottles and glasses. For the other 95% of scenes, which have plenty of diffuse components, PG is already effective.

But my second comparison was largely diffuse. Certainly no glass or transparency.

And I agree with you. I was merely commenting on the current state.

Path guiding is useful for indirect light scenarios. Those scenarios make up a subset of all the scenes you could render. Your tests were in direct light.

But still lit by direct light. As already stated, path guiding helps best with indirect lighting scenarios.

For scenes lit exclusively with direct light - path guiding may actually hinder performance.
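To make the indirect-light point concrete, here is a toy 1D Monte Carlo sketch. It is not how Cycles or OpenPGL work internally: the `radiance` function, the window width and the `p_guided` mixture weight are all made up for illustration, and the "guided" distribution is hard-coded where a real path guider would learn it during rendering. It only shows why aiming most samples at the small region where light actually arrives cuts noise at an equal sample count.

```python
import random
import statistics

WIDTH = 1.0 / 128.0  # hypothetical: light arrives from 1/128 of all directions


def radiance(x):
    # Toy incoming light over directions x in [0, 1).
    # Only a narrow window contributes; the true integral is 128 * (1/128) = 1.
    return 128.0 if x < WIDTH else 0.0


def estimate_uniform(n, rng):
    # "Brute force": directions are drawn uniformly, so most samples see nothing.
    return sum(radiance(rng.random()) for _ in range(n)) / n


def estimate_guided(n, rng, p_guided=0.9):
    # "Guided": with probability p_guided sample inside the bright window,
    # otherwise fall back to uniform sampling. Each sample is divided by the
    # mixture pdf so the estimate stays unbiased.
    total = 0.0
    for _ in range(n):
        if rng.random() < p_guided:
            x = WIDTH * rng.random()
        else:
            x = rng.random()
        pdf = (1.0 - p_guided) + (p_guided / WIDTH if x < WIDTH else 0.0)
        total += radiance(x) / pdf
    return total / n


def spread(estimator, n=256, trials=200, seed=1):
    # Mean and standard deviation of repeated estimates (noise at equal samples).
    rng = random.Random(seed)
    results = [estimator(n, rng) for _ in range(trials)]
    return statistics.mean(results), statistics.stdev(results)


if __name__ == "__main__":
    print("uniform (mean, noise):", spread(estimate_uniform))
    print("guided  (mean, noise):", spread(estimate_guided))
```

Both estimators converge to the same answer, but the guided one is far less noisy for the same number of samples. If the light were spread evenly over all directions (the direct-light case), the guided distribution would have nothing to exploit and its extra bookkeeping would only cost time.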

Here is a scene lit by two lights. The red light is around a corner and thus only contributing indirect light. The green light is lighting the wall directly, but the monkey only indirectly via a bounce off the wall. This is a perfectly reasonable lighting situation for a real scene.

These are rendered for 1 minute each. As you can see - even though PG only uses CPU currently - it still edges out the brute force GPU which was able to render many more samples in the same time. Once PG lands on GPU and gains glossy bounces, it’ll be an absolute game changer.

PG Off (CPU)

PG Off (GPU)

PG On (CPU)

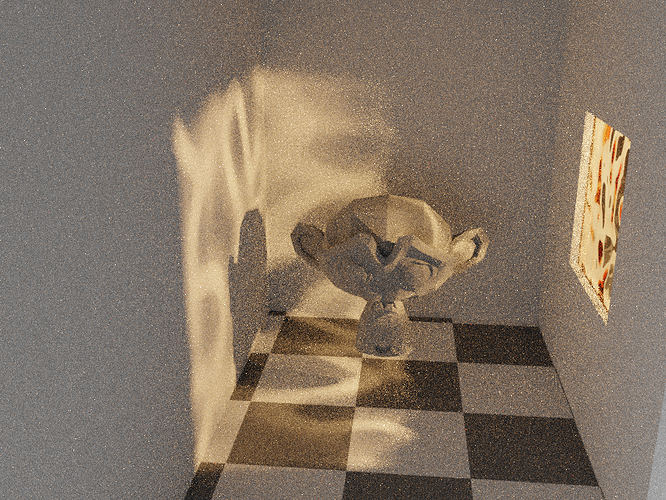

Another couple of tests - this time with a bumpy glass window. Equal time render (60 seconds)

PG Off (GPU)

PG On (CPU)

This type of scene is pretty much impossible without path guiding even using brute force GPU due to the refraction effects of the glass. You can cheat it using the light path tricks, but you lose some or all of the refractive effects in the process.

With adaptive sampling, you can in fact get clean caustics of that type if you give the engine enough time and enough samples. The catch is that you are looking at a rendertime of more than a day on CPU combined with over a million samples. I have had scenes where effects needing a million samples converged to a similar quality with just 500 through Path Guiding, which almost gives the impression of actually having the holy grail of rendering algorithms now.
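As a rough sanity check on those numbers, here is a small sketch using the standard Monte Carlo rule that noise falls off as one over the square root of the sample count. The function name is hypothetical, and it ignores that guided samples cost more time each, so it says nothing about wall-clock speedup.

```python
import math


def equal_noise_factors(n_unguided, n_guided):
    # If both renders reach the same noise level, per-sample variance must have
    # dropped by the sample ratio, and per-sample noise (standard deviation)
    # by its square root.
    sample_ratio = n_unguided / n_guided
    return sample_ratio, math.sqrt(sample_ratio)


if __name__ == "__main__":
    ratio, noise_factor = equal_noise_factors(1_000_000, 500)
    print(f"{ratio:.0f}x fewer samples, about {noise_factor:.1f}x less noise per sample")
```

So matching a million unguided samples with 500 guided ones means each guided sample is doing the work of roughly 2000 unguided ones in that scene.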

If that is not enough, OpenPGL will be able to crack open even more difficult lighting situations in the next release because of MLS (as the algorithms are compatible).

That’s why I said “pretty much impossible”

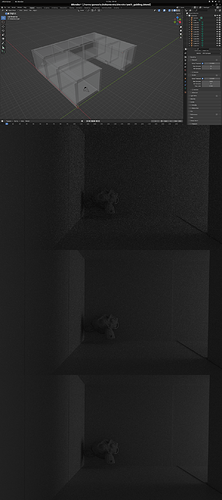

All renderings set to one minute.

From top to bottom:

- Setup

- CPU No path guiding (Threadripper 12/24 Core)

- CPU path guiding

- GPU (RTX 3060)

Scene:

https://drive.google.com/file/d/1ZXajMCoGsYo5I5jGfq0UHGS6xvv4rjP7/view?usp=share_link

Verdict (for this scene): Path guiding is much better than unguided CPU rendering, but brute force GPU is still better.

This is similar to my first test above. With a more powerful GPU (I have a 980 Ti), it’s possible the GPU render could have edged out the CPU+PG one.

However for my second test - no amount of GPU samples could get me close to the quality of CPU+PG (and I tried up to about 500,000).