ONE MORE UPDATE: There is now a GraphicAll daily build available for Windows, which will contain my latest progress in converting Cycles into a spectral engine.

https://blender.community/c/graphicall/nkbbbc/

ANOTHER UPDATE:

Over on this devtalk topic, I’ve been working on modifying Cycles to be a spectral renderer from the ground up. Progress is slow since I’m unfamiliar with the Cycles code, but it seems achievable.

UPDATE:

Since the start of this thread, there has been significant progress and several changes in technique, including moving the entire workflow inside Blender and using correct models for the conversion to RGB. A lot of the discussion covered colour spaces and accurate conversion from spectral images to RGB, which is the foundation of this workflow.
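For anyone who wants to see the conversion idea in code, here is a minimal sketch, not the actual node setup used in this workflow. It assumes each pixel has already been rendered as a list of per-wavelength samples, and that the caller supplies a table of CIE colour matching function values for the same wavelength bins (the `cmf` argument and all function names are hypothetical). The XYZ to linear sRGB matrix and the sRGB transfer function are the standard D65 ones. Normalising the result so that the reference white has Y = 1 is left to the caller.

```python
# Standard CIE XYZ (D65) to linear sRGB matrix.
XYZ_TO_SRGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def spectrum_to_xyz(samples, cmf, step_nm):
    """Riemann-sum a sampled spectrum against colour matching functions.

    samples: one radiance value per wavelength bin
    cmf:     one (x_bar, y_bar, z_bar) tuple per bin, supplied by the caller
    step_nm: width of each wavelength bin in nanometres
    """
    x = sum(s * c[0] for s, c in zip(samples, cmf)) * step_nm
    y = sum(s * c[1] for s, c in zip(samples, cmf)) * step_nm
    z = sum(s * c[2] for s, c in zip(samples, cmf)) * step_nm
    return (x, y, z)


def xyz_to_linear_srgb(xyz):
    """Apply the 3x3 matrix; the result can fall outside [0, 1]."""
    return tuple(sum(m * v for m, v in zip(row, xyz)) for row in XYZ_TO_SRGB)


def encode_srgb(c):
    """Clamp to [0, 1] and apply the sRGB transfer function for display."""
    c = min(max(c, 0.0), 1.0)
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055
```

The point of going through XYZ rather than summing wavelength bands straight into R, G and B is that the colour matching functions describe how an observer responds to each wavelength, which keeps the final RGB conversion a plain matrix multiply.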

There is still significant overhead, since Blender rebuilds the BVH for every render layer even though the geometry is identical, but I’m hoping to get to the point where anyone can use this process in their workflow without too much extra work.

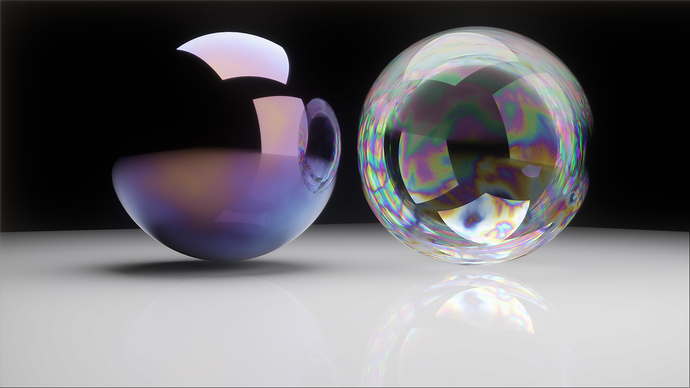

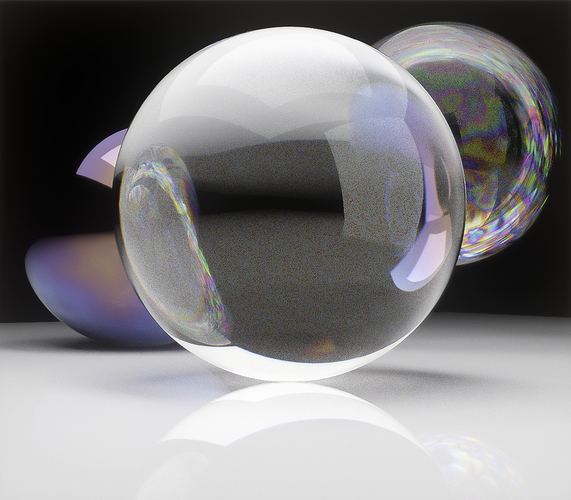

Here is an example image created with the latest iteration of the workflow:

This is a continuation of my previous thread which has since disappeared.

Here are some tests I’m doing with Cycles and a Spectral Rendering process I devised. While it is only marginally slower than standard rendering, the biggest drawback/struggle is actually getting spectral data to use for materials.

This shows a bubble using thin film interference, and a steel ball which has been heated, producing an oxide layer of varying thickness. Both of these are quite hard to create accurately in RGB, but come naturally in spectral rendering.
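To show why thin film effects fall out so easily once you have wavelengths to work with, here is a minimal sketch of single-layer thin film reflectance at normal incidence. It is not the shader used for the images here; the function name and default refractive indices (a water-like film of 1.33 in air) are assumptions for illustration, and it ignores viewing angle, polarisation and absorption in the layer underneath, which matters for an oxide on steel.

```python
import cmath
import math


def thin_film_reflectance(wavelength_nm, thickness_nm,
                          n_film=1.33, n_outside=1.0, n_inside=1.0):
    """Reflectance of one thin layer at normal incidence.

    Combines the Fresnel reflections at the two interfaces with the phase
    the light picks up crossing the film (the Airy formula).
    """
    # Amplitude reflection coefficients at the outer and inner interfaces.
    r1 = (n_outside - n_film) / (n_outside + n_film)
    r2 = (n_film - n_inside) / (n_film + n_inside)
    # Phase thickness of the film for this wavelength.
    beta = 2.0 * math.pi * n_film * thickness_nm / wavelength_nm
    phase = cmath.exp(-2j * beta)
    r = (r1 + r2 * phase) / (1.0 + r1 * r2 * phase)
    return abs(r) ** 2


# Reflectance across the visible range for a 300 nm soap film.
spectrum = [(wl, thin_film_reflectance(wl, 300.0)) for wl in range(400, 701, 50)]
```

Because the reflectance depends on the ratio of thickness to wavelength, each wavelength gets a different value for the same film, and varying the thickness across a surface shifts the whole pattern. In an RGB renderer there are only three numbers to work with, so that detail has to be faked.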

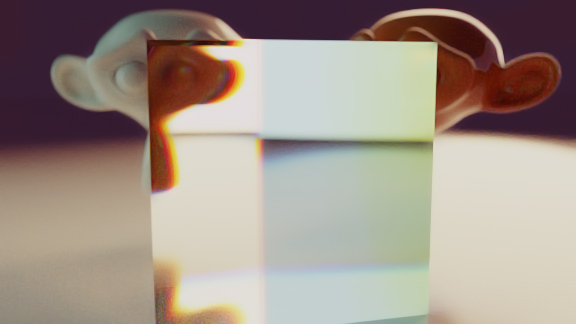

This was the first test I did as a proof of concept, showing two different light sources (one blackbody and one fluorescent), in-camera chromatic aberration, and dispersion in glass. All of these are very easy to create in this workflow.
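As an illustration of how simple a blackbody source is to describe spectrally, here is a minimal sketch that evaluates Planck's law per wavelength. The function name is hypothetical and this is not taken from the scene setup above; it only shows where the emission spectrum for a given temperature comes from.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 299792458.0      # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def blackbody_radiance(wavelength_nm, temperature_k):
    """Spectral radiance from Planck's law, in W / (sr m^2 m)."""
    lam = wavelength_nm * 1e-9  # convert nanometres to metres
    exponent = H * C / (lam * K_B * temperature_k)
    return (2.0 * H * C * C) / (lam ** 5 * math.expm1(exponent))


# Relative emission of a 2700 K lamp across the visible range,
# scaled so that the strongest sample is 1.
wavelengths = list(range(400, 701, 20))
raw = [blackbody_radiance(wl, 2700.0) for wl in wavelengths]
relative = [value / max(raw) for value in raw]
```

A fluorescent lamp has no closed-form equivalent; its spiky spectrum would have to come from measured data.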

I have yet to do a full scene because I haven’t found a way to extrapolate standard RGB colour data into a usable spectrum, but black and white textures work just fine. A proper understanding of light helps here as well, but the process should be simple enough that anyone could learn it in half an hour or so.