Yes, I believe that will be the case, as stated: “In future patches, the number of channels and data type will depend on the CMake configuration where spectral rendering will be enabled or disabled.”

To me, this sounds like a compile-time option rather than a runtime one. But I guess we’ll have to wait and see.

If it were a compile-time option, people could of course build their own Blender with non-spectral Cycles anytime.

greetings, Kologe

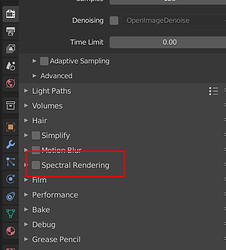

Yes, it does. The spectral Cycles X branch that you can download now has a switch to turn it on and off.

Though they did it by duplicating the kernels, with RGB and spectral kernels side by side instead of a single Cycles kernel. They might go a different way this time. Personally, I am fine with having two kernels; let’s see how it goes.

Is that available only for Windows? I’m not finding the download.

Just asked Pembem:

The only difference is that you need two compiled kernels instead of one, with spectral rendering enabled or disabled, which should be straightforward to implement with this patch. So it’s not a problem to add it in the future. The only concern I have is increasing the size of the Blender installation by almost 30%.
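To illustrate why a runtime switch means shipping two compiled kernels, here is a minimal, hypothetical C++ sketch. It is not Cycles code: the type names, the function names, the `HYPOTHETICAL_WITH_SPECTRAL` macro and the count of four wavelengths per path are all assumptions. The same shading code is written once and templated on the colour representation; with a runtime switch both instantiations end up in the binary, whereas a compile-time (CMake) option builds only one of them.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical colour representations; not actual Cycles types.
struct RGBRepr {
    static constexpr std::size_t kChannels = 3;  // red, green, blue
};
struct SpectralRepr {
    static constexpr std::size_t kChannels = 4;  // assumed wavelengths sampled per path
};

template <typename Repr>
using Color = std::array<float, Repr::kChannels>;

// One kernel source, written once and templated on the representation.
template <typename Repr>
Color<Repr> shade_path(float throughput) {
    Color<Repr> c{};
    c.fill(throughput);  // placeholder for the real shading work
    return c;
}

// Average the channels of a colour into a single value.
template <typename Repr>
float average(const Color<Repr>& c) {
    float sum = 0.0f;
    for (float v : c) sum += v;
    return sum / static_cast<float>(Repr::kChannels);
}

// Runtime switch: both instantiations below are compiled into the binary,
// which is why offering the switch roughly means shipping two kernels.
float render_pixel(bool spectral, float throughput) {
    assert(throughput >= 0.0f);
    if (spectral) {
        return average<SpectralRepr>(shade_path<SpectralRepr>(throughput));
    }
    return average<RGBRepr>(shade_path<RGBRepr>(throughput));
}

// Compile-time switch: a build option (hypothetical macro name) picks one
// representation, so only that kernel is instantiated and shipped.
#ifdef HYPOTHETICAL_WITH_SPECTRAL
using BuildRepr = SpectralRepr;
#else
using BuildRepr = RGBRepr;
#endif

float render_pixel_fixed(float throughput) {
    return average<BuildRepr>(shade_path<BuildRepr>(throughput));
}
```

For example, `render_pixel(true, 0.5f)` runs the spectral instantiation and `render_pixel(false, 0.5f)` the RGB one, while `render_pixel_fixed` only ever contains whichever representation was chosen at build time.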

Yeah, so the switch will be there if people are fine with a somewhat larger installation.

It was on GraphicAll. Note that the Cycles X version was very buggy, and it does not have spectral node support.

This is the Cycles X version:

Here is the 2.93 version with fewer bugs (note that it’s also buggy, just not as buggy) and spectral node support:

This is a Windows-only release, right?

Yes, it’s Windows.

Brecht just said this:

The current build times and size of kernels are already problematic; we’re not likely to double that for one feature.

The installation size might be what prevents the runtime switch.

I don’t see why. My current Blender install is around 1GB. We now have multi-TB drives, we install games that are 50GB+, and we render out animations and create video files that are GBs in size.

Just the download for DaVinci Resolve is 2.5GB, so a Blender install of 1.5GB is really no big deal.

The biggest issue is not disk space; it’s downloading and hosting.

The bigger it is, the more it costs the Blender Foundation in hosting, the harder it becomes for people with limited bandwidth to download, and, of course, the bigger the carbon footprint as well.

I am quite sure that Blender is going to become more modular in the future, though that is going to take quite a while. From my point of view, having a basic version and adding modules such as Cycles, Cycles Spectral or fluid simulation as needed will make more and more sense.

I’ve always wondered why Blender doesn’t use P2P tech to ease the bandwidth load. It would save money. They could use it at least for major releases, though I’m not sure how it would work for daily builds.

Interesting question. Most Linux distros have torrent mirrors, and Linux install images can be way over 200MB.

I don’t know why myself, but maybe it’s for these reasons:

1- Some users believe anything from a P2P network is a virus fest, and a lot of network admins outright ban its use on their networks.

2- The upload bandwidth of a P2P connection eats into the user’s quota, which is a big problem if you have a limited quota like we do here in Egypt. Mine is 140 GB.

There is also loading time. Would you really want Blender to be like the big guns and take over a minute to load? Why not also have Blender use 16 gigabytes of RAM in an empty scene while we’re at it, to give it that authentic heavyweight professional-app feel?

In addition, unless you want to go back to slow disk drives for your apps, keep in mind that we are not yet at the point where SSD storage grows on trees (as far as price per gigabyte is concerned).

I would say this is comparable to the switch from rasterization to path tracing. People may not need spectral, but rendering keeps moving closer to reality, and there’s no reason to think NPR won’t be possible once we get there.

P2P doesn’t have to be exclusive; it can be supplementary. It would certainly lighten the load on the BF servers.

It’s the equivalent of 30% more traffic and 30% more hosting costs (probably not exactly, as prices likely increase sublinearly, but still).

That’s money that then can’t go to other parts of the operation. Fewer devs, fewer artists. It could actually be quite a big deal, slowing down bug handling and feature expansion.

I really hope that’s not the case, though. It would really suck if that were the one thing stopping Cycles from getting spectral support.

I think it could potentially allow new NPR effects. But manipulating the three color channels completely independently, as a lot of NPR workflows probably assume is possible, isn’t going to work with spectral rendering.

Hosting costs? The servers are sponsored by Dell, and the bandwidth by XS4ALL (source). The bandwidth is not limitless, though, so there is a cost (things would slow down if that link got saturated), but it’s not monetary in nature.

That’s good, then. I’m not entirely sure where the problem lies in that case, but Brecht said even the current kernel size is already problematic somehow.