It looks like it might be possible, then, to loop it back as a texture. The PyGPU API is a bit of a mystery to me, but overall, streaming textures from Python into a shader in combination with it sounds feasible. I'm not sure how practical it would be performance-wise. (I found a thread with what seems to be render-to-texture through the Python API: Live Scene Capture to Texture.)

There's also the realtime compositor in newer Blender versions, which could generate the mask itself if the mask is only needed outside of the material. However, current Eevee renders compositing passes separately; the rewrite should have the passes available at the same time.

Overall, it's a bit frustrating to have this limitation with material shaders, as several game engine techniques (z-feathered alpha, for example) aren't possible in Eevee because of it.
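For context, z-feathered alpha (often called soft particles or depth fade in game engines) needs the depth of the opaque geometry behind the current pixel, which is exactly what an Eevee material can't read. The per-pixel math itself is simple. A minimal sketch in plain Python, assuming both depths are linear distances from the camera; `fade_distance` is a hypothetical tuning parameter, not a Blender setting:

```python
def clamp01(x: float) -> float:
    """Clamp a value into the [0, 1] range."""
    return max(0.0, min(1.0, x))


def z_feathered_alpha(scene_depth: float, surface_depth: float, fade_distance: float) -> float:
    """Depth-fade alpha: fade a transparent surface out as it nears the geometry behind it.

    Assumes both depths are linear distances from the camera (larger = farther).
    fade_distance is a hypothetical parameter controlling the width of the fade.
    """
    if fade_distance <= 0.0:
        raise ValueError("fade_distance must be positive")
    # Gap between the transparent surface and the opaque scene behind it.
    gap = scene_depth - surface_depth
    # 0 where they touch, 1 once the gap reaches fade_distance.
    return clamp01(gap / fade_distance)
```

With the surface 2.0 units away and `fade_distance = 0.5`, geometry at 2.25 gives half alpha and anything at 2.5 or beyond is fully opaque.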

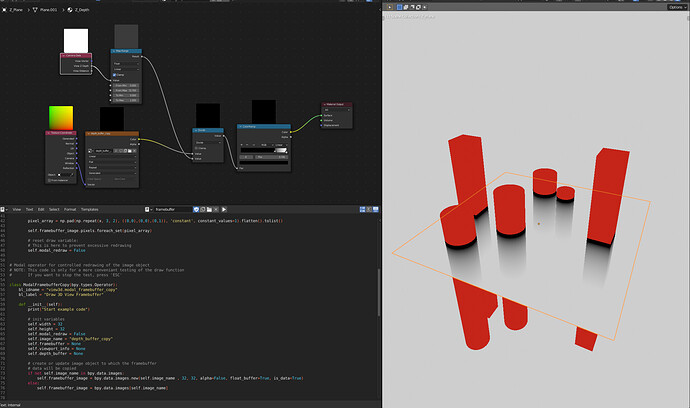

Edit: I tried the script from your link. The depth from it still includes alpha-blended surfaces, but enabling "Show Backface" gives a depth pass without that surface.

Edit 2:

The range differs arbitrarily between the two depth buffers, but the idea works. Performance isn't really interactive, though.
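One possible reason the ranges don't line up (an assumption, not verified against the script) is that one buffer holds raw, non-linear depth while the other holds linear distance. For a standard OpenGL-style perspective projection with depth stored in [0, 1], a raw value can be converted back to linear view depth from the near and far clip distances. A sketch, where `near` and `far` stand in for the camera's Clip Start and Clip End:

```python
def linearize_depth(d: float, near: float, far: float) -> float:
    """Convert a [0, 1] perspective depth-buffer value to linear view depth.

    Assumes an OpenGL-style perspective projection where d = 0 maps to the
    near plane and d = 1 maps to the far plane.
    """
    if not (0.0 < near < far):
        raise ValueError("expected 0 < near < far")
    return (near * far) / (far - d * (far - near))


def normalize_linear_depth(z: float, near: float, far: float) -> float:
    """Remap a linear depth to [0, 1] between the clip planes so two buffers share a range."""
    return (z - near) / (far - near)
```

With `near = 1` and `far = 3`, a raw value of 0.5 maps to a linear depth of 1.5 rather than 2.0: most of the buffer's precision goes to geometry close to the camera, so comparing a raw buffer directly against a linear one gives inconsistent ranges.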

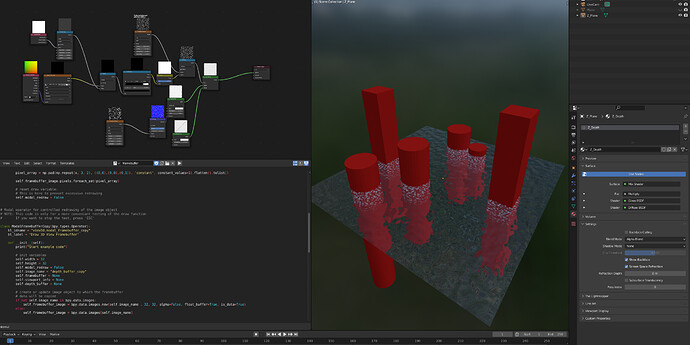

And as an input for foam:

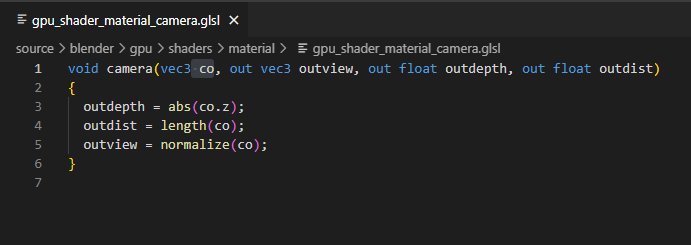
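The foam input uses the same depth difference, inverted so the mask is strongest where the water surface meets geometry. A sketch with a smoothstep falloff; the smoothstep shaping and the `foam_width` name are illustrative choices, not taken from the node setup in the screenshot:

```python
def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """GLSL-style smoothstep: 0 below edge0, 1 above edge1, Hermite curve in between."""
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def foam_mask(scene_depth: float, water_depth: float, foam_width: float) -> float:
    """Return 1.0 where the water touches geometry, fading to 0.0 once the gap exceeds foam_width.

    Depths are assumed to be linear distances from the camera; foam_width is hypothetical.
    """
    if foam_width <= 0.0:
        raise ValueError("foam_width must be positive")
    gap = scene_depth - water_depth
    return 1.0 - smoothstep(0.0, foam_width, gap)
```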

Also, as a side note, here's what the actual difference is between the Camera Data node outputs: