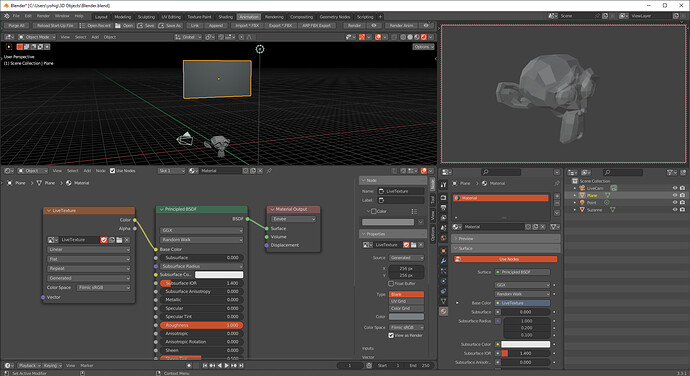

LIVE SCENE CAPTURE → IMAGE TEXTURE

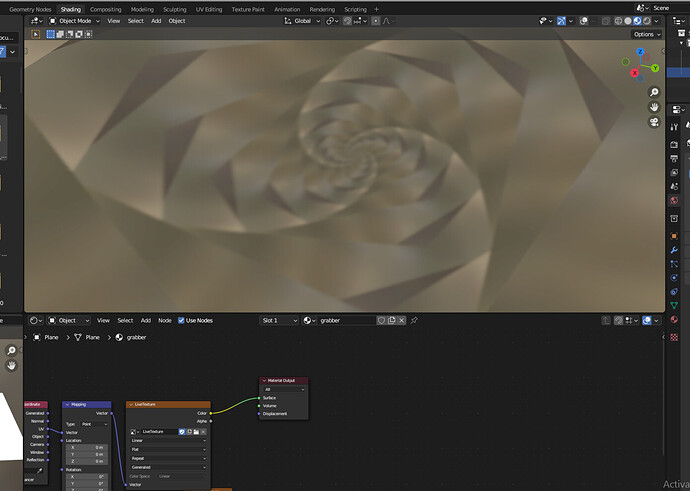

Let's talk about rendering your scene in real time and outputting the result into a texture. This is a common technique in games for portal effects and whatnot, but it is not an essential feature in offline rendering software like Blender. There still seems to be demand for this technique though. This post was prompted by the frequent requests on this Twitter post to break the effect down:

https://twitter.com/kolupsy/status/1525811584718741505?s=20&t=2eDLtjrgVcKw-TmsQXDutA

To take on this effect we make use of a hidden but very potent part of the Blender API: the GPU module. You can find out more about it in the Blender Python API documentation.

If you look at the many examples posted there you will find snippets that generate images from offscreen rendering and ones that capture the scene view from a camera and display it as a little window on your UI. By combining both techniques, we can achieve the “live texture” effect.

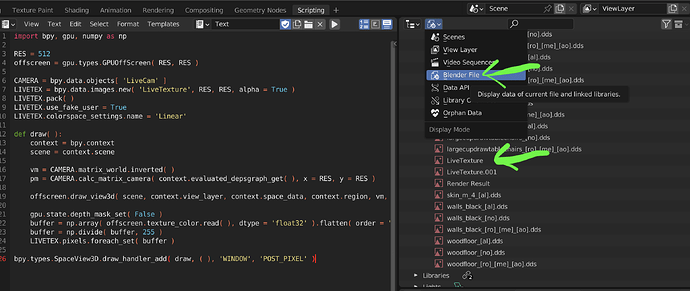

Below is the code that I used in the Twitter post. Before running it, make sure you have a camera object called ‘LiveCam’ in your scene, or else the script won't work properly.

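    import bpy, gpu, numpy as np

    RES = 512  # capture resolution in pixels (square)

    # Offscreen buffer that the scene gets drawn into
    offscreen = gpu.types.GPUOffScreen( RES, RES )

    # The camera to capture from; it must exist in the scene
    CAMERA = bpy.data.objects[ 'LiveCam' ]

    # The image datablock that receives the capture
    LIVETEX = bpy.data.images.new( 'LiveTexture', RES, RES, alpha = True )
    LIVETEX.pack( )
    LIVETEX.use_fake_user = True
    LIVETEX.colorspace_settings.name = 'Linear'

    def draw( ):
        context = bpy.context
        scene = context.scene

        # View and projection matrices of the capture camera
        vm = CAMERA.matrix_world.inverted( )
        pm = CAMERA.calc_matrix_camera( context.evaluated_depsgraph_get( ), x = RES, y = RES )

        # Draw the 3D view as seen from the camera into the offscreen buffer
        offscreen.draw_view3d( scene, context.view_layer, context.space_data, context.region, vm, pm )
        gpu.state.depth_mask_set( False )

        # Read the colors back, scale them from 0-255 to 0-1 and copy them into the image
        buffer = np.array( offscreen.texture_color.read( ), dtype = 'float32' ).flatten( order = 'F' )
        buffer = np.divide( buffer, 255 )
        LIVETEX.pixels.foreach_set( buffer )

    # Run draw( ) every time a 3D viewport is redrawn
    bpy.types.SpaceView3D.draw_handler_add( draw, ( ), 'WINDOW', 'POST_PIXEL' )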

Now we will get a new image in our blend file called ‘LiveTexture’, as long as there is not already an image of the same name in the same file. This image can be used like any other image: as a texture in the shader graph, in Geometry Nodes, etc.
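
The read-back step at the end of `draw( )` can be tried outside Blender with plain NumPy. This is only a sketch under assumptions: the helper name `to_image_pixels` is made up, and the input is assumed to be a `(height, width, 4)` array of 8-bit values, which may not match the exact shape Blender's buffer reports (the script above uses its own flatten order for that). What it shows is the target format: `Image.pixels` wants one flat run of RGBA floats between 0 and 1.

```python
import numpy as np

def to_image_pixels(rgba8):
    """Turn a (height, width, 4) array of 0..255 values into a flat
    float32 RGBA array in the 0.0..1.0 range (hypothetical helper)."""
    rgba8 = np.asarray(rgba8)
    if rgba8.ndim != 3 or rgba8.shape[2] != 4:
        raise ValueError("expected an array shaped (height, width, 4)")
    # C order keeps the R, G, B, A values of each pixel next to each other.
    return (rgba8.astype(np.float32) / 255.0).ravel()

# Made-up 2x3 "capture": every pixel is opaque mid-grey.
fake_capture = np.full((2, 3, 4), 128, dtype=np.uint8)
fake_capture[..., 3] = 255

pixels = to_image_pixels(fake_capture)
print(pixels.shape, pixels.dtype)
```

Inside Blender, an array like `pixels` is what gets handed to `LIVETEX.pixels.foreach_set( )`; its length has to be exactly width × height × 4.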

Limitations:

- This will only work in the viewport, material preview or Eevee render. It will not capture Cycles renders. You can modify the script to render a Cycles image on every frame update, but this is not recommended for obvious performance reasons.

- Speaking of performance: it is not great. Now, this is not exactly a hack, but I am sure Blender was not built with “texture streaming” in mind. Large captures with a lot of samples will tank preview performance fairly quickly.

- The script above does not handle on-render updates. They can be implemented using app handlers, although I have not tested that myself yet.

- The capture will always include the entire visible UI. You can toggle off overlays if you want to capture only the relevant parts of the scene.
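
To put a rough number on the performance point above, here is a small sketch that counts how much data gets copied into the image on each update. It assumes 4 channels and 4 bytes per value (float32), and it ignores the cost of drawing the scene itself, so treat it as a lower bound rather than a benchmark. The function name is made up for illustration.

```python
def floats_per_capture(width, height, channels=4):
    """Number of values written into the image on each update."""
    return width * height * channels

for res in (256, 512, 1024):
    count = floats_per_capture(res, res)
    mib = count * 4 / (1024 * 1024)  # assuming 4 bytes per float32 value
    print(f"{res}x{res}: {count} floats, {mib:.2f} MiB per update")
```

Doubling the resolution quadruples the amount of data, which is one reason small captures behave so much better.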

Tips:

- Use 1 or 0 Eevee viewport samples. Each viewport sample adds to the performance load of the capture, so keeping the count low will help. 0 samples allows you to have unlimited samples in your scene while the capture still only uses 1 (very useful).

- Disable viewport denoising. This will make your viewport less slushy and clean up your capture.

- Change the resolution in the script to your liking. It is currently set up for a 1:1 ratio, but you can modify the script to have any resolution you want. Just keep it low. 256x256 can be heavy enough. 512x512 has worked great too in simple scenes. Just try to find the limit for your scene.

- If you want to iteratively make changes to the script, either make sure to reopen Blender with every update of the script or implement script updating yourself.
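
One possible way to approach the script updating mentioned in the last tip is to remember the handle of the draw handler and remove the old one before adding a new one. The sketch below shows only that bookkeeping, with stand-in functions so it runs outside Blender. The helper `replace_handler`, the key name and the fake add/remove functions are all made up for illustration.

```python
import itertools

def replace_handler(store, key, add, remove):
    """Remove the handler saved under `key` (if any), then register a new one.

    store  -- a dict that survives between script runs
    add    -- zero-argument callable that registers a handler and returns its handle
    remove -- callable that takes an old handle and unregisters it
    """
    old_handle = store.get(key)
    if old_handle is not None:
        remove(old_handle)
    store[key] = add()
    return store[key]

# Stand-ins so the sketch runs without Blender: handles are just integers.
_ids = itertools.count(1)
active = set()

def fake_add():
    handle = next(_ids)
    active.add(handle)
    return handle

def fake_remove(handle):
    active.discard(handle)

store = {}
replace_handler(store, "live_texture_handle", fake_add, fake_remove)
replace_handler(store, "live_texture_handle", fake_add, fake_remove)  # the "script re-run"
print(active)  # only the most recent handler is still registered
```

Inside Blender, `store` could be `bpy.app.driver_namespace`, `add` could wrap `bpy.types.SpaceView3D.draw_handler_add( draw, ( ), 'WINDOW', 'POST_PIXEL' )`, and `remove` could wrap `bpy.types.SpaceView3D.draw_handler_remove( handle, 'WINDOW' )`. This has not been tested against the script above, so take it as a starting point only.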

Final remarks:

I personally don't find this technique too useful; it is a bit janky, especially in its current state. BUT I will gladly listen to what you have to say about it and why it is ACTUALLY useful. I would be open to expanding on this idea given a good enough reason to do so.

If you decide to do something cool with it, then I am very glad to have helped. Be sure to tag me on Twitter so that I can see what cool things you were able to do with it. My Twitter handle is @kolupsy. You can also follow me there: it is where I am most active on social media, and I often post funky stuff like this.

https://twitter.com/kolupsy/status/1525924648168333315?s=20&t=2eDLtjrgVcKw-TmsQXDutA