Hi all, this will be another of my epic posts (in terms of length), so clear the calendar, make a mug of coffee and find a comfortable reading position.

Mods: I’m posting this here as it covers multiple areas, from modeling to animation, simulation and rendering. I thought it best to keep it all consolidated in one place rather than spread out all over the place.

I would like to discuss the subject of an Animation Workflow/Pipeline in Blender: not just some high-level flowchart (like Animatic → Layout → Animation → Render), but the detailed process of commands, file formats, step-by-step procedures and the order of modifiers in the stack and, where there’s more than one way to achieve a similar outcome, the pros and cons of each method.

A key factor in the workflow is likely the ability to link one asset (Blender file) into another file, so that any change made to an asset is automatically picked up by all production files which use that asset. With that in mind, for each of the production steps below: is there only one way to link assets/production data (such as key frame animation or simulations)? Are there stages where, even if you can link the data, there’s a good reason not to? And are there any cases where it would be really nice to be able to link the data but you just can’t?
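To make it concrete what I mean by "linking", here is a minimal sketch of linking a single collection through Blender's Python API, as far as I understand it. The function name, the file path and the collection name `CH-hero` are all made up for illustration; `blend_data` is assumed to be `bpy.data` and `scene` a normal Blender scene, passed in as arguments so the function doesn't rely on global state.

```python
def link_collection(blend_data, scene, blend_path, collection_name):
    """Link one collection from another .blend file into the given scene.

    blend_data is expected to be bpy.data and scene a bpy.types.Scene.
    """
    # link=True keeps a live reference to the library file;
    # link=False would append (copy) the data instead.
    with blend_data.libraries.load(blend_path, link=True) as (data_from, data_to):
        if collection_name not in data_from.collections:
            raise ValueError(f"{collection_name!r} not found in {blend_path}")
        # Request only the one collection we care about, by name.
        data_to.collections = [collection_name]

    # After the with-block, the requested names have been replaced
    # by the linked collection datablocks.
    linked = data_to.collections[0]
    scene.collection.children.link(linked)
    return linked


# Hypothetical usage from Blender's Python console:
#   import bpy
#   link_collection(bpy.data, bpy.context.scene,
#                   "/projects/film/assets/hero.blend", "CH-hero")
```

This only covers the plain link. Making a Library Override so the rig can actually be posed would still be a separate step afterwards.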

Character Model

Clearly in order to animate a character, one would usually have the model (mesh) with a full shader setup and complete rig in a single Blender file (Collection) for file linking and Library Overrides. However, I’m aware that in many studios, the actual mesh, shader setup (also called surfacing) and the animation rig are all in separate files and then combined to create the final full character file.

A couple of advantages are obvious, namely you can have different people working on each part somewhat at the same time. You can adjust the shader setup without fear of messing up the rig or it getting in the way.

But it raises the big question of HOW you do that. Are you linking in each part, or importing/appending it into a final file? How is weight painting handled? You don’t want to have to redo it each time, or work out where it went wrong, if something about the mesh file was changed. Where are corrective shape keys stored: the mesh file, the rig file or the final file? And again, what happens if the mesh is ‘updated’?

Or at the end of the day, is it simply better (easier) to do it all in a single file for a small (solo) studio?

Character Simulation Animation

Following on a bit with the character workflow, let’s say you have the character linked into a file with a rough layout (more on that later) and whatever else is needed to start the actual process of character animation. You then key all that animation (likely using the controls on the rig), which moves the mesh just how you want it to be. However, loose objects like clothing and hair usually aren’t hand animated; they are simulated instead.

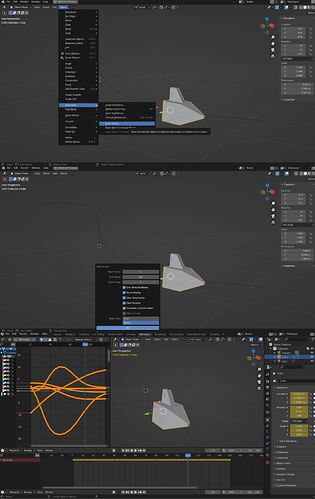

The general wisdom is that any simulation is done in a separate (new) file containing the exported (baked) animation of the character plus any additional objects, settings, etc. that are required in order to produce the simulation.

This is usually done at a larger scale in order to allow for fine-tuning of the simulation settings.

This therefore raises a few questions:

- Best way to link/import character and scale to 5 times larger

- How to ‘copy’ baked animation from animation file to simulation file (will it still work correctly if character is now 5 times larger)

- Best way to export final simulation baked data and scale back down for final rendering
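On the scale question, the arithmetic at least seems simple: if the character is scaled uniformly about the world origin, every baked vertex position is just multiplied by the same factor, and dividing by that factor afterwards gets you back to where you started. A tiny plain-Python sketch of that round trip (no Blender API involved, and the vertex positions are made-up numbers):

```python
def scale_frames(frames, factor):
    """Uniformly scale baked vertex positions about the world origin.

    frames is a list of frames; each frame is a list of (x, y, z) tuples.
    """
    return [
        [(x * factor, y * factor, z * factor) for (x, y, z) in frame]
        for frame in frames
    ]


# Two made-up frames of a three-vertex "character".
baked = [
    [(0.0, 0.0, 0.0), (0.1, 0.0, 1.7), (0.3, 0.2, 0.9)],
    [(0.5, 0.0, 0.0), (0.6, 0.0, 1.7), (0.8, 0.2, 0.9)],
]

sim_scale = 5.0
scaled_up = scale_frames(baked, sim_scale)               # what the simulation file would see
scaled_back = scale_frames(scaled_up, 1.0 / sim_scale)   # what the render file would need
```

This only shows that the positions survive the round trip (up to floating-point rounding). It says nothing about which simulation settings might need adjusting at the larger scale, which is really the part I’m asking about.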

Are there also different things to consider depending on exactly what is being simulated, be it cloth, hair or liquids/fire/smoke, beyond the specific settings for each simulation type?

Layout

Based on the shot list, storyboard, concept art and animatic, a Rough Layout file would be put together using just enough linked assets and directly added objects (such as the camera, a ground plane, maybe some walls, etc.) to establish the basic scene for the action, along with the start and end positions of the camera and of any characters that need to be animated.

Now while it’s only ‘Rough’, it does pretty much become the blueprint on which the rest of the production (Character Animation, Final Layout, i.e. the overall look of the background environment, and the final lighting and rendering) will be based. In fact, in many ways it almost sets everything else in stone: if you go back and change the camera position or a character’s position, or add/remove a significant scene object (something that a character is looking at or, worse, interacting with), then anything already done down the line is at best wrong or at worst totally broken, depending on how and what has been linked back to the Rough Layout file.

So, for the Character Animation, does this mean the Rough Layout is linked mostly as ‘reference’ while the character(s) are library overrides for animation? If so, what’s the best way to ‘link as reference’ and do you even want it as a live link, in that any changes made to the Rough Layout file would be automatically updated in the character animation file?

One assumes that much the same ‘reference’ process would be used to then create the Final Layout file which will be used for lighting and rendering.

Lighting and Render Prep

This is generally where everything gets pulled together and the final lighting and polish are done before rendering out each frame. The general wisdom for the final rendering file seems to be to make it as simple and light as possible. By that I mean they bake all of the animation, etc. directly onto the base object/mesh data, removing all the complexity of rigs, key frames, interpolation, etc., so there is nothing else that can go wrong during the actual render process.

If that is the case, then once again we have the question of HOW. In general you still only have each character asset, which has been mostly linked throughout the production process, so how do you now just pull out the basic mesh objects and then somehow apply the animation that has been done in Pose Mode on the various animation controls as part of the overall rig/armature and bake all that into something that I guess just moves vertices from frame to frame?
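To illustrate what I imagine "baking" means at the lowest level, here is a rough sketch that steps through a frame range and records the deformed vertex positions of a mesh object after the rig and modifiers have been evaluated. The function name is my own invention; `context` is assumed to be `bpy.context` and `obj` a mesh object deformed by an armature. As far as I understand, in practice this job is usually handled by an Alembic export read back through a Mesh Sequence Cache modifier rather than by a hand-rolled script, so treat this purely as an illustration of the idea.

```python
def sample_vertex_frames(context, obj, frame_start, frame_end):
    """Return {frame: [(x, y, z), ...]} of obj's deformed, object-space vertices.

    context is expected to be bpy.context and obj a mesh object that is
    deformed by an armature (or any other modifiers).
    """
    scene = context.scene
    original_frame = scene.frame_current
    frames = {}
    try:
        for frame in range(frame_start, frame_end + 1):
            # Move the scene to this frame so animation and rig are re-evaluated.
            scene.frame_set(frame)
            depsgraph = context.evaluated_depsgraph_get()
            evaluated = obj.evaluated_get(depsgraph)
            # Temporary mesh with all modifiers (including the armature) applied.
            mesh = evaluated.to_mesh()
            try:
                frames[frame] = [tuple(v.co) for v in mesh.vertices]
            finally:
                # Free the temporary mesh again.
                evaluated.to_mesh_clear()
    finally:
        # Put the timeline back where the user left it.
        scene.frame_set(original_frame)
    return frames


# Hypothetical usage from Blender's Python console:
#   import bpy
#   cache = sample_vertex_frames(bpy.context, bpy.context.object, 1, 24)
```

The result is exactly the "vertices moving from frame to frame" data I describe above, with no rig or key frames left in it. How best to get that back onto a clean mesh in the render file is the open question.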

I think that’s mostly it and yes, long post, so well done and thank you if you read this far without skipping bits. I’m looking forward to any insights into any/all of the above.