That’s fantastic if true. In that case I can build a new computer with an AMD processor and Nvidia RTX to get the best of both worlds once I can afford it.

It's quite sad that Nvidia doesn't share their code too. Maybe it's not possible because of the Turing architecture?

Both OIDN and the OptiX denoiser are equally closed. It is practically impossible to modify them. OIDN is more open on paper, but not much more. In deep learning openness means that you publish not only the model as Intel did, but you also have to publish the training code and the training data. If any of those are missing, it is not possible to reproduce the results and as such it is not open.

Is there already a consensus about the best simple OIDN denoiser Compositor node setup? I mean one you can remember and manually construct within about 30 seconds.

If you want, I will soon release the AI denoiser from E-Cycles on the Blender Market and Gumroad, for 10€ at first.

- It takes care of all the work for you,

- lets you quickly switch between different quality levels,

- uses about half of the memory needed in the lord odin setup while offering the same or better quality,

- can integrate the node setup into an existing compositing node tree and even handle multiple render layers.

I’m warming up for E-Cycles more and more.

Will it be the OIDN denoiser, OptiX denoiser, maybe both, or maybe a custom-coded denoiser?

Does anyone know why he gets such a bad result?

https://twitter.com/MjTheHunter/status/1165250610741968902/photo/1

On the white ball, the 1-sample example is really bad. It seems to be a node setup issue.

It’s based on OIDN and available on Gumroad.

I’ll also add options for baking later. The addon is half price until baking is implemented, and updates will be free.

Edit: As some have asked: if you already own E-Cycles, you already have all the features available in this addon, and it will stay that way. Both share the same code.

@DeepBlender @bliblubli @hypersomniac

Out of curiosity, could this type of library potentially be used to speed up other functions besides denoising renders?

For example, could it be used in Eevee to speed up the rendering of some shaders or shadows, or to create some real-time ray tracing and global illumination algorithms, etc.?

Really, we need quick creation of a preset node setup as soon as we tick the “Denoising Data” checkbox. It is obvious that if we activate this option, we want to use the denoising capabilities.

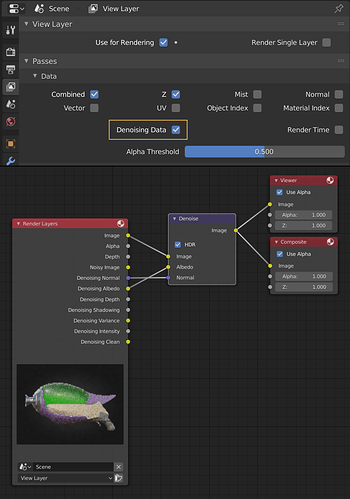

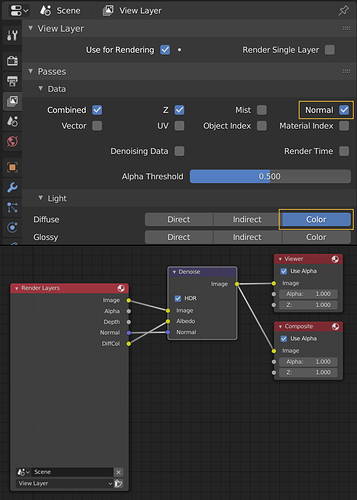

Following up on my previous image, using the regular Normal and Diffuse Color passes in the Blender 2.81 Compositor’s OIDN Denoise node turns out to yield better results than the dedicated passes (Denoising Data ➔ Normal and Albedo). So here’s a new image for those wanting to try OIDN.
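For anyone who would rather script it than rebuild it by hand, here is a minimal sketch of that setup in Python. The function name `build_oidn_setup` is made up for illustration, and the socket names ("Normal", "DiffCol", "Denoising Normal", "Denoising Albedo") are what I assume the Render Layers node exposes in 2.81, so check them against your build. Note that it clears the existing compositor tree first.

```python
def build_oidn_setup(scene, view_layer, use_denoising_data=False):
    """Wire Render Layers -> Denoise -> Composite (hypothetical helper)."""
    # Enable the passes that feed the Denoise node before creating nodes.
    if use_denoising_data:
        view_layer.cycles.denoising_store_passes = True
        normal_out, albedo_out = "Denoising Normal", "Denoising Albedo"
    else:
        view_layer.use_pass_normal = True
        view_layer.use_pass_diffuse_color = True
        # Assumed socket names for the regular passes in Blender 2.81.
        normal_out, albedo_out = "Normal", "DiffCol"

    scene.use_nodes = True
    tree = scene.node_tree
    tree.nodes.clear()  # WARNING: removes every existing compositor node

    render = tree.nodes.new("CompositorNodeRLayers")
    denoise = tree.nodes.new("CompositorNodeDenoise")
    composite = tree.nodes.new("CompositorNodeComposite")

    # Image plus the two guide passes go into the Denoise node.
    tree.links.new(render.outputs["Image"], denoise.inputs["Image"])
    tree.links.new(render.outputs[normal_out], denoise.inputs["Normal"])
    tree.links.new(render.outputs[albedo_out], denoise.inputs["Albedo"])
    # The denoised result becomes the final composite.
    tree.links.new(denoise.outputs["Image"], composite.inputs["Image"])
    return denoise
```

Inside Blender you would call it from the Python console as `build_oidn_setup(bpy.context.scene, bpy.context.view_layer)`, or pass `use_denoising_data=True` to compare against the dedicated Denoising Data passes.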

Do you mean neural network based solutions in general?

More or less, but using this OIDN denoiser library specifically.

OIDN has been trained to remove noise in raytraced images. That’s literally the only thing it can do.

Exactly, and what I imagined is removing noise from a surface: a shader, a projected shadow, ray-traced color projected onto a surface, etc.

By doing so, you would have the render calculations in Eevee at about 1/10 of what they cost now, or it could add features that currently can’t be had in real time.

Another example of use could be denoising procedural textures.

Why would you have noise in procedural textures? Change the procedure then, right?

Procedural textures are calculated on the fly; they are not precalculated textures that get applied at render time.

What we’ve had previously (pre path tracers) was a “density information” parameter that could be utilized for stuff like distance based AA. But here we’re usually sending enough rays so that this isn’t an issue anymore (i.e. a checkerboard pattern under perspective looks pretty decent in all circumstances).

I haven’t used this denoiser yet, but if it’s final image based, can’t you use different settings based on (blurred?) matID/objID masks?

Try to calculate many procedural textures with many nodes in 8K in real time.

Obviously, I am referring to a possible recovery of computing resources at scale.

Are you basically talking about a denoiser for Eevee?

Yes, more or less, but at a deeper level: denoising surfaces or effects projected onto surfaces, so Eevee and the GPU can get more significant results in real time.

My idea is basically quite simple:

what you got at 200 samples, you get at 20 samples.
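To put a rough number on that gap: Monte Carlo noise falls off roughly as 1/sqrt(N) with N samples, so a 20-sample result should carry about sqrt(10) ≈ 3.2 times the noise of a 200-sample one, and that is what a denoiser would have to make up for. A small sketch with a toy estimator (uniform random numbers standing in for light samples, not an actual renderer; `estimate_noise` is just an illustrative name):

```python
import random
import statistics

def estimate_noise(samples, trials=2000, seed=0):
    """Spread of a `samples`-sample average, measured over many trials."""
    rng = random.Random(seed)
    means = [
        sum(rng.random() for _ in range(samples)) / samples
        for _ in range(trials)
    ]
    # Standard deviation of the averages = noise level of one "pixel".
    return statistics.pstdev(means)

noise_20 = estimate_noise(20)
noise_200 = estimate_noise(200)
ratio = noise_20 / noise_200
print(f"noise at 20 samples is about {ratio:.2f}x the noise at 200 samples")
```

So the 10x saving in samples only has to be bought back with roughly a 3x reduction in noise, which is why the idea is tempting in the first place.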