I vaguely remember an interview with an Apple employee, around the time the M1 Ultra with 128 GB was advertised, who gave a number for how much memory the GPU can use. AFAIR it was only about 90 GB, or at least less than I had expected.

I can’t find it now, but I remember some people reporting when the M1 came out that the max VRAM they could use on a 64 GB M1 was 40 GB or so.

For example:

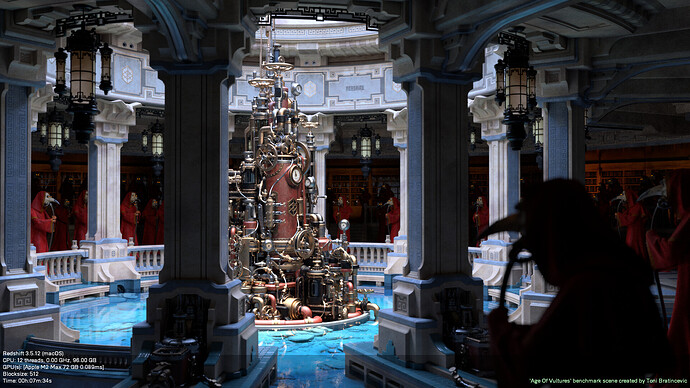

96 GB, but 72 GB VRAM.

128 GB seems to equal 96 GB VRAM.
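To make the pattern in those numbers easier to see, here is a quick sketch that only does the arithmetic on the figures quoted in this thread. The dictionary and the `gpu_share` helper are my own names for illustration, and the 64 GB entry was reported as "40GB or so", so treat it as approximate.

```python
# Figures as reported in this thread: unified memory (GB) -> GPU-usable memory (GB).
# The 64 GB value was quoted as "40GB or so", so it is approximate.
reported = {64: 40, 96: 72, 128: 96}


def gpu_share(total_gb, gpu_gb):
    """Fraction of unified memory that was reported as usable by the GPU."""
    return gpu_gb / total_gb


shares = {total: gpu_share(total, gpu) for total, gpu in reported.items()}

for total, share in shares.items():
    print(f"{total} GB unified -> {reported[total]} GB GPU ({share:.1%})")
```

Both the 96 GB and 128 GB figures work out to 75% of unified memory, while the rough 64 GB report comes out lower at 62.5%.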

Apple doesn’t document this and I haven’t heard a single executive talk about it, but it’s very easy to test with Activity Monitor. And I can tell you that Apple allows the GPU to pull almost all of the unified RAM, right up to the point of almost crashing the system.

I have a 16 GB Apple Silicon device. I can note system memory with Blender idle, then render a super heavy scene and note the difference in system memory and swap. And like I said, it’s close to the max amount minus what the OS and Blender were using at idle.
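The idle-versus-render comparison described above boils down to one subtraction. A minimal sketch, assuming you read "Memory Used" and "Swap Used" off Activity Monitor by hand; the `Reading` class, the `render_footprint` helper, and the numbers are all hypothetical placeholders, not measurements from this thread.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One Activity Monitor snapshot, in GB (values typed in by hand)."""
    memory_used: float
    swap_used: float

    @property
    def total(self) -> float:
        # RAM in use plus whatever has been pushed out to swap.
        return self.memory_used + self.swap_used


def render_footprint(idle: Reading, peak: Reading) -> float:
    """Extra memory (RAM plus swap) that appeared between idle and peak render."""
    return peak.total - idle.total


# Placeholder values for illustration only.
idle = Reading(memory_used=6.0, swap_used=0.0)
peak = Reading(memory_used=14.5, swap_used=3.0)

print(f"render footprint: {render_footprint(idle, peak):.1f} GB")
```

Counting swap as well as RAM matters here, because on a 16 GB machine a heavy scene can push part of the working set out to disk.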

Is it possible that the real optimizations for the M3 and RT cores haven’t really happened yet, and performance will increase over the next few months?

That would be great if more speed comes, because the rendering gains for the Max chip posted across the web, like in the Mani video, already have me gushing.

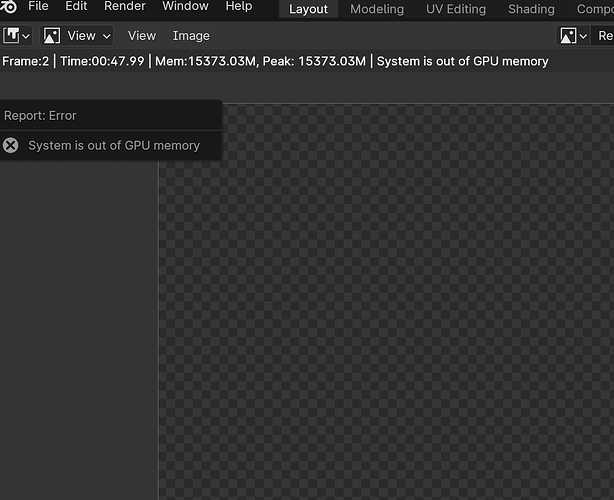

Well, I don’t know. When I tried to push past 15 GB on the 18 GB M3 using GPU rendering in Cycles, it crashed with an out-of-memory message…

There will definitely be some performance gain in Octane and Unreal. But I’m not so sure about Cycles.

Yes, that is what I thought.

I think that’s expected behaviour. The system would only have 3GB left for everything else in that case.

Sure, but doesn’t this mean ‘out of core’ rendering is impossible on the GPU? On a PC with a dedicated GPU, once the VRAM is full, the GPU can still render while swapping with system RAM. This incurs a heavy performance penalty, but it works.

When the Mac runs out of memory for the GPU, it has no RAM to swap with, so it just crashes. The CPU can maybe swap with the SSD, but the performance penalty is probably much greater. I don’t know, I haven’t tested it, and I’m not interested in CPU rendering as it is already too slow…

Yes, that’s what I mean. I haven’t done it on this one yet, but on the M1 Air I managed that a lot.

PyTorch has the same effect: if you try to use too much memory, it just crashes.

Then again, that is normal: the OS needs some memory to run and cannot swap everything.

Perhaps Redshift sets an artificial limit to avoid that, not sure.

Macs have swap and it works extremely well. Depending on the speed of your SSD, it has been shown to be almost as fast as RAM.

You have a good point that the GPU may not be allowed to use swap. Apple may be putting all system memory in swap for large scenes, and the GPU, once it reaches the actual RAM limit, may be crashing your system.

But this still may not be the issue, and something else could be happening in your case. I have yet to have a crash with large scenes on 16 GB, so don’t assume cause and effect from your crashes.

Well, Cycles said not enough memory, so I don’t know what else it would be.

Ah, I didn’t catch that in your post. So yeah valid argument on your side. Or possibly there is a limit to how much swap the GPU can use? Interesting.

Seeing that it settles at 15.7 GB means the 18 GB model is maybe 1 GB short of being able to render this lol

Edit: I’m gonna try again just to make sure.

Yes, maybe. I thought upping the res from 1080p to 1440p would crash it, but no, it “only uses 16 GB” then.

Even 4k barely goes up to 17 GB.

Anybody wanna fire up Disney’s Moana scene on their 16 GB AS machine to test?

I wonder what the actual texture sizes are for that scene, and whether you could upscale them to 4K in Affinity (if they aren’t 4K already) and link to those.