Does Cycles love more GPUs? Where is the point of diminishing returns?

I’ve been wanting to know if I can incorporate raytraced renders into more parts of my creative process, rather than just final renders. I have a particular interest in lighting and its effects (SSS, caustics, AO, etc.). With Optix and adaptive sampling, the number of samples needed for a good image is coming down rapidly. Nvidia RTX 3000-series cards look to have 4x the RT processing power, which should accelerate renders further. Cycles will never be an online (real-time) renderer, but it’s getting closer. Even in the online render world we’re seeing impressive use of raytracing: Unreal Engine has had RT since v4.25, and v5 has some amazing new features.

After my last test of PCI-e risers and their effect on render times, I wanted to understand how Cycles scales with multiple GPUs. Can I just keep adding more GPUs, or am I wasting money after the first few? I’ve seen many comments on forums (including this one) claiming that performance falls off steeply once you go beyond 2-3 cards, and that this is why you don’t often see high-end workstations with more than 4 GPUs. However, because Cycles is a tile-based renderer, I expected it to scale fairly well with more GPUs, much like it does with more CPU cores.
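A common way to frame the diminishing-returns question is Amdahl’s law: if a fraction p of a single GPU’s frame time can be split across N cards and the rest cannot (scene loading, BVH building, texture upload), the best-case speedup is 1 / ((1 - p) + p / N). Below is a minimal sketch under that assumption. The setup and per-sample costs are made-up numbers, not measurements from this test, and the model ignores effects such as uneven tile distribution at the end of a frame.

```python
def amdahl_speedup(p, n):
    """Best-case speedup on n GPUs when a fraction p of single-GPU time parallelizes."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("need 0 <= p <= 1 and n >= 1")
    return 1.0 / ((1.0 - p) + p / n)


def parallel_fraction(setup_s, per_sample_s, samples):
    """Share of single-GPU frame time spent sampling, assuming a fixed setup cost
    (scene load, BVH build, texture upload) that extra GPUs do not shorten."""
    sampling = per_sample_s * samples
    return sampling / (setup_s + sampling)


if __name__ == "__main__":
    # Hypothetical costs, chosen only to show the shape of the curves.
    for samples in (50, 250, 1250):
        p = parallel_fraction(setup_s=20.0, per_sample_s=1.5, samples=samples)
        row = ", ".join(f"{amdahl_speedup(p, n):.1f}x" for n in range(1, 9))
        print(f"{samples:>5} samples: {row}")
```

The only point is the shape: the more samples per frame, the larger p becomes and the closer the speedup curve gets to linear.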

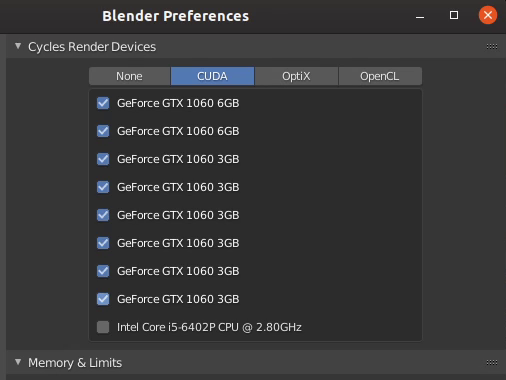

For this test I used 8 x Nvidia GeForce GTX 1060 video cards connected to a cryptocurrency mining motherboard that can handle up to 13 video cards. I used a 6th-gen Core i5 quad-core CPU and 8GB of RAM running Ubuntu 19.10.

Setup

- Asrock H110 BTC+ Pro motherboard

- Intel Core i5 6402P quad-core CPU

- 8GB DDR4 RAM

- 8 x Nvidia GeForce GTX 1060 (3GB or 6GB)

- 8 x Generic Chinese PCI-e x1 USB risers

- 2 x 800W Raidmax Gold PSU

- Ubuntu 19.10 x64 with Nvidia GeForce drivers 440.xx

- Blender 2.82a

Method

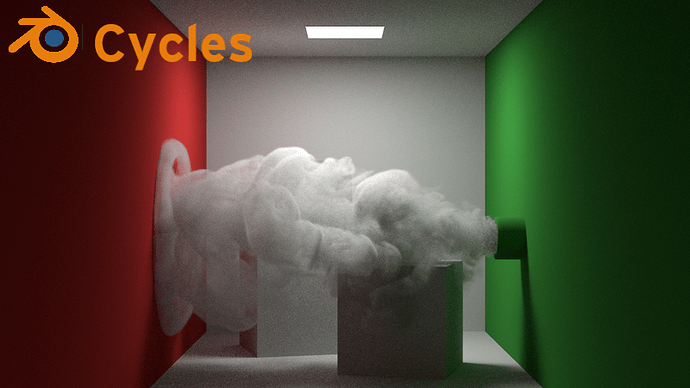

I wanted to use the Junk Shop splash screen scene from the PCI-e riser test, but that scene uses about 6GB of VRAM, and not all of the cards in this test had 6GB. The most complex demo scene I could find that fits within 3GB of VRAM was the Blender Classroom scene, which uses about 1.2GB. I tested at 50, 150, 250, 500, and 1250 samples with 1 to 8 GPUs.
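If you want to repeat a grid like this yourself, it can be scripted from the command line. Here’s a minimal sketch, assuming `blender` is on your PATH and a hypothetical setup script (called `bench_setup.py` here) that reads the GPU count and sample count from the arguments after `--` and applies them before rendering. Blender processes its arguments in order, so `--python` has to come before `-f`. Timing with `time.perf_counter()` measures the whole Blender process, including startup and scene loading, so the numbers will be somewhat higher than the render time Blender reports in its own log.

```python
import statistics
import subprocess
import time


def build_command(blend, setup_script, n_gpus, samples, blender="blender"):
    """Background render of frame 1. --python runs before -f so the settings are
    applied first; arguments after "--" are ignored by Blender and left for the script."""
    return [blender, "-b", blend, "--python", setup_script, "-f", "1",
            "--", str(n_gpus), str(samples)]


def time_render(cmd, runs=2):
    """Run the same render `runs` times and return the mean wall-clock seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL)
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations)


def run_grid(blend, setup_script, sample_counts, gpu_counts=range(1, 9)):
    """Time every (GPU count, samples) pair; returns {(n_gpus, samples): seconds}."""
    return {
        (n, s): time_render(build_command(blend, setup_script, n, s))
        for s in sample_counts
        for n in gpu_counts
    }
```

For example, `run_grid("classroom.blend", "bench_setup.py", [50, 250, 500])` would time 24 configurations, each averaged over two runs.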

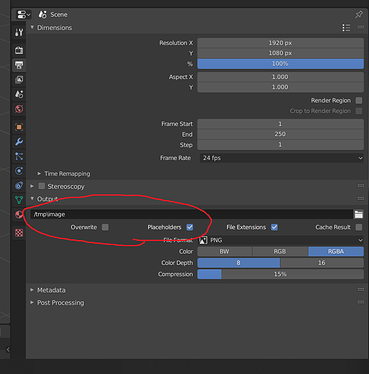

I set the Cycles Render Devices to CUDA (Optix is not supported on these cards) and selected however many cards I wanted to use for the test, starting from 1 and going all the way up to 8.

Settings were: Cycles as the render engine, Supported as the feature set, GPU Compute as the device, and Path Tracing as the integrator. Denoising was not enabled. Everything else was left as-is in the demo .blend file; I did not adjust resolution or other settings. I ran each timed render at least twice and took the average.
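The same device and render settings can also be applied from a script, which makes it easier to switch GPU counts between runs. This is a minimal sketch, assuming Blender 2.8x Python API names (`compute_device_type`, `get_devices()`, `scene.cycles.progressive`); several of these changed in later Blender releases, so treat it as a starting point rather than a drop-in tool. The file name `bench_setup.py` and the argument layout after `--` are hypothetical. The device-picking logic is kept separate so it can be checked outside Blender.

```python
# Sketch for Blender 2.8x. Assumed usage (hypothetical file names):
#   blender -b classroom.blend --python bench_setup.py -f 1 -- 4 250
import sys

try:
    import bpy  # only importable inside Blender
except ImportError:
    bpy = None


def pick_devices(device_types, n_gpus, wanted="CUDA"):
    """Return one flag per device: True for the first n_gpus devices of type `wanted`."""
    flags = []
    enabled = 0
    for dev_type in device_types:
        use = dev_type == wanted and enabled < n_gpus
        if use:
            enabled += 1
        flags.append(use)
    if enabled < n_gpus:
        raise ValueError(f"only {enabled} {wanted} device(s) found, {n_gpus} requested")
    return flags


def configure(n_gpus, samples):
    """Apply the test settings to the current scene (Blender 2.8x API names)."""
    prefs = bpy.context.preferences.addons["cycles"].preferences
    prefs.compute_device_type = "CUDA"  # OptiX is RTX-only, so CUDA on GTX cards
    prefs.get_devices()                 # refresh the device list
    flags = pick_devices([d.type for d in prefs.devices], n_gpus)
    for device, use in zip(prefs.devices, flags):
        device.use = use

    scene = bpy.context.scene
    scene.render.engine = "CYCLES"
    scene.cycles.feature_set = "SUPPORTED"
    scene.cycles.device = "GPU"
    scene.cycles.progressive = "PATH"   # Path Tracing integrator in 2.8x
    scene.cycles.samples = samples


if bpy is not None and "--" in sys.argv:
    # Blender ignores everything after "--", leaving it for the script.
    n_gpus, samples = map(int, sys.argv[sys.argv.index("--") + 1:][:2])
    configure(n_gpus, samples)
```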

Caveats

- The classroom scene is a benchmark scene, which may not be representative of the work you do

- This test gives a general idea of scaling, but some scenes may scale differently

- I used PCI-e x1 for all cards. As I found in my PCI-e riser test, x1 links are considerably slower at very low sample counts (e.g. 50), but above ~325 samples the penalty shrinks to around 6% and stays there. In this test I wasn’t looking at overall speed, but at scaling. Using PCI-e x1 slots should not affect scaling much, but it did reduce performance in all scenarios accordingly.

- Blender uses increasing amounts of CPU as GPUs are added. Adding a fourth GPU was enough to push the i5 quad-core CPU to 100%. Strangely, on my dual Xeon system, 7 GPUs used only about 12% of the CPU, which is equivalent to about 50% of the i5’s CPU power. I’ve asked the Blender devs about this but haven’t received an answer. The 100% CPU utilization did not seem to have a large impact on render times, but it likely made the scaling at 4 GPUs and above look somewhat worse than it should.

- This was tested in Linux, but Windows performance was very similar.

Results

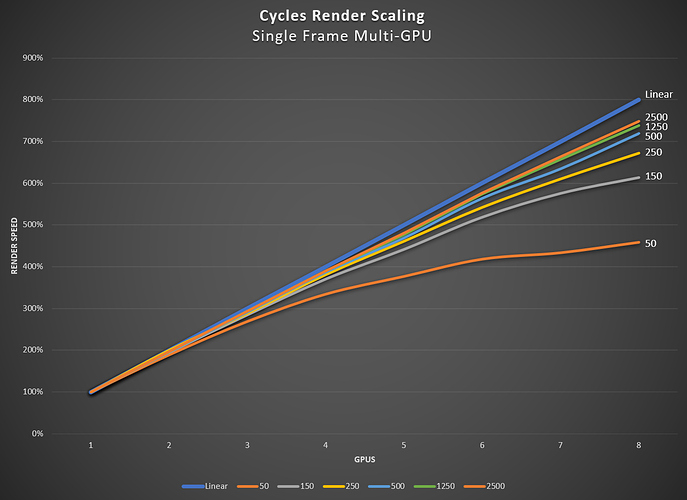

- With 8 x GTX 1060 at 1250 samples, a single frame rendered in 5 minutes. A single RTX 2080 Ti with Optix acceleration took 6 minutes.

- Low samples (e.g. 50) do not scale well past 3 GPUs, as expected. There’s just not enough rendering to be done compared to the loading of textures, geo, etc.

- High samples scale well all the way up to 8 GPUs. The higher the samples, the more linear the performance gains. At 1250 samples, 8 cards delivered 7.4x the performance of a single card.

- The sweet spot for renders around 250 samples is 6 GPUs in this test.
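To put results like these in context, it helps to compute speedup (single-GPU time divided by N-GPU time) and scaling efficiency (speedup divided by N). Here’s a small sketch. `sweet_spot` uses a hypothetical rule (stop once the last added card contributes less than half a card’s worth of throughput), which is not necessarily the criterion behind the 6-GPU figure above.

```python
def speedups(times):
    """times[i] is the render time with i+1 GPUs; returns speedup vs. one GPU."""
    return [times[0] / t for t in times]


def efficiency(speedup, n_gpus):
    """Fraction of ideal linear scaling achieved (1.0 = perfectly linear)."""
    return speedup / n_gpus


def sweet_spot(times, min_gain=0.5):
    """Hypothetical rule: largest GPU count whose last added card still adds
    at least `min_gain` of one card's throughput."""
    s = speedups(times)
    best = 1
    for n in range(2, len(s) + 1):
        if s[n - 1] - s[n - 2] < min_gain:
            break
        best = n
    return best


# The reported 7.4x on 8 cards at 1250 samples:
print(f"{efficiency(7.4, 8):.1%}")  # 92.5%
```

By that measure, the 1250-sample result is about 92.5% of perfectly linear scaling.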

Thoughts

First, unless you are doing very low sample renders, Cycles scales quite well as you add GPUs. There’s no reason to stop at 4 GPUs out of fear of diminishing returns. Unless you typically render at fewer than 200 samples, you’re better off with more than 4 video cards if your motherboard can handle them and you can afford them.

Second, scaling doesn’t need to be expensive. In this test I used a $60 used CPU on a $60 used motherboard, and video cards that go for about $130 each on eBay (an especially good value if you get the 6GB versions). The total cost for the 8-GPU test system was $1300. For about $100 more than a single RTX 2080 Ti, you get a full system that is 20% faster than the 2080 Ti when rendering moderate to high samples, even when the 2080 Ti uses Optix acceleration. Eight cards do consume more power than even the power-hungry 2080 Ti, but not dramatically so: the full system with all 8 cards rendering used around 800 watts. Of course, you have much less VRAM than the 2080 Ti. Cycles copies the whole scene to each card, so the limit is the 3GB or 6GB on a single 1060, not the combined total. Still, most Blender scenes seem to fit in 6GB of VRAM.
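The cost and speed comparison above comes down to simple arithmetic, sketched here. The parts list only covers the prices quoted (CPU, motherboard, and eight cards), so the remaining ~$140 of the $1300 presumably went to RAM, PSUs, and risers. The energy figure assumes a steady draw of about 800 W for the whole 5-minute render.

```python
def percent_faster(baseline_minutes, new_minutes):
    """Throughput gain of `new` over `baseline`, in percent."""
    return (baseline_minutes / new_minutes - 1.0) * 100.0


def energy_wh(watts, minutes):
    """Energy for one render at a roughly constant power draw."""
    return watts * minutes / 60.0


listed_parts = 60 + 60 + 8 * 130  # CPU + motherboard + eight GTX 1060s, in USD

print(f"{percent_faster(6, 5):.0f}% faster")   # 20% faster
print(f"{energy_wh(800, 5):.0f} Wh per frame")  # 67 Wh per frame
print(f"listed parts: ${listed_parts} of about $1300")
```

Note that "20% faster" is a throughput comparison: the 2080 Ti takes 20% longer per frame, while the 8-GPU system cuts render time by about 17% (1 - 5/6).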

Finally, I was happy to see how well Cycles scaled. In a couple of weeks, I should have more RTX cards so I can use raytracing in even more areas of my creative process. Once I get them, I will do a quick test with RTX and Optix to see if the scaling pattern is similar.

I’ll have more posts like this. I have a lot of questions that I’m exploring and thought it would be fun and informative to others if I post my results.
