Optix for Nvidia GTX Video Cards is Here: The Good and the Bad

Optix has shown impressive acceleration in Cycles rendering (30-45% faster than CUDA in my testing) and in denoising, but until now these features were available only on Nvidia's RTX cards, which have dedicated ray tracing (RT) cores.

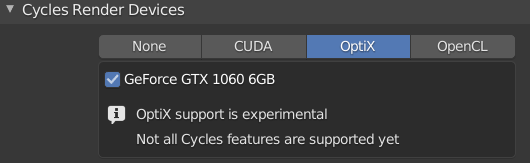

Blender 2.90 now includes Optix support for Nvidia GTX video cards (in fact, any Maxwell-generation or newer card). For GTX owners, this affects two things in Blender: viewport denoising and Cycles rendering.

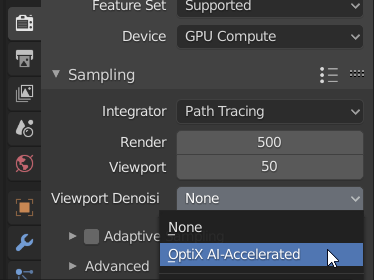

Optix AI-Accelerated viewport denoising is the more visible of the two. With very few samples, you can get a clean ray-traced image (though with some painting-like artifacts), even on an older video card. This makes working with ray-traced scenes much more accessible, and GTX and RTX owners alike will love this feature. Note that Optix AI-Accelerated denoising only works with Nvidia video cards. The Open Image Denoiser (Intel's denoiser) is also coming to Blender 2.90, for both the viewport and final renders, and is not limited to Nvidia hardware.

The other area where GTX cards can now use Optix is Cycles rendering. In the Blender 2.90 alpha, Optix rendering is enabled for GTX-series cards. When Optix uses the RT cores in an RTX card, the gains are huge: 30-45% faster in my testing. I was curious whether Optix rendering would offer any benefit to GTX users, whose cards have no RT cores.

For this test I used a Zotac Nvidia GTX 1060 6GB video card on an Intel H110 chipset motherboard with a Celeron G3920 CPU and 16GB of RAM running Ubuntu 19.10.

Setup

- Asrock H110 BTC+ Pro motherboard

- Intel Celeron G3920 dual core CPU

- 16GB DDR4 dual channel RAM

- Zotac Nvidia GeForce GTX 1060 6GB (PCI-e x16)

- 800W Raidmax Gold PSU

- Ubuntu 19.10 x64 with Nvidia GeForce drivers 440.xx

- Blender 2.90 alpha (06/06/2020 build)

Method

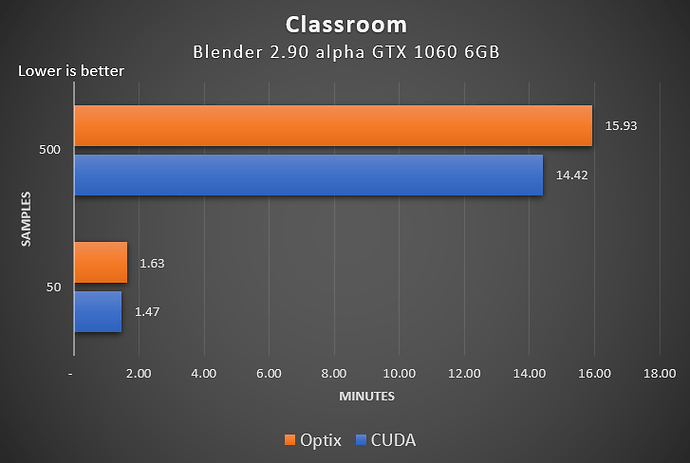

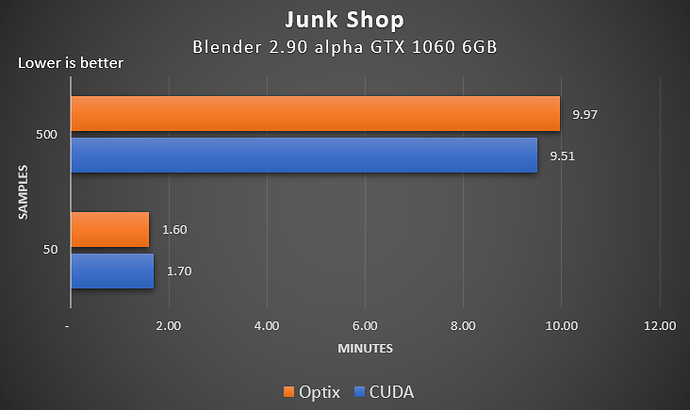

I rendered the Classroom and Junk Shop demo scenes at 50 and 500 samples per pixel, once with CUDA and once with Optix for each combination. Denoising was off, and all other settings were left at the demo files' defaults.
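The test matrix above works out to eight renders (2 scenes × 2 sample counts × 2 backends). Below is a minimal sketch of how those runs could be scripted; it is not the exact procedure used for this post. It assumes your Blender build supports the `-- --cycles-device` command-line option (recent versions document it, but check yours), and the `.blend` file names are hypothetical placeholders for wherever you saved the demo scenes. The script only builds and prints the command lines rather than running them.

```python
import itertools
import shlex

# Hypothetical file names for the two demo scenes; adjust to your own paths.
SCENES = ["classroom.blend", "junkshop.blend"]
SAMPLES = [50, 500]
DEVICES = ["CUDA", "OPTIX"]


def build_commands(blender: str = "blender") -> list[list[str]]:
    """Return one Blender command line per (scene, samples, device) combination."""
    commands = []
    for scene, samples, device in itertools.product(SCENES, SAMPLES, DEVICES):
        # Blender processes arguments in order, so --python-expr must come
        # before -f for the sample count to be set before the frame renders.
        expr = f"import bpy; bpy.context.scene.cycles.samples = {samples}"
        commands.append([
            blender, "-b", scene,        # run in background with this scene
            "--python-expr", expr,       # override the sample count
            "-f", "1",                   # render frame 1
            "--", "--cycles-device", device,  # Cycles-specific option (assumed supported)
        ])
    return commands


if __name__ == "__main__":
    for cmd in build_commands():
        print(shlex.join(cmd))
```

Each printed line can be run from a shell and timed; Blender also reports the render time in its console output. Generating the commands with `itertools.product` keeps the scene, sample, and device lists in one place, so adding another sample count or backend is a one-line change.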

Caveats

- As always, the scenes you typically work with will be different from the demo scenes, so you may see different results in your work.

- This was tested with Blender 2.90 alpha. Comparing CUDA render times between 2.83 and the 2.90 alpha showed no significant difference, but because 2.90 is still in alpha, performance could change before it is released.

Results

- Without RT cores, GTX cards gain no consistent speedup over CUDA when rendering with Optix

- Optix rendering with GTX was generally slower than CUDA rendering, in the range of 5-10% depending on the scene

- Optix was faster only in the Junk Shop test at 50 samples, and then by just 5% over CUDA

- The number of samples had almost no impact on the results
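The results above are expressed as percentages relative to CUDA. The post does not state the exact formula, so the following is a minimal sketch assuming "X% slower" means the Optix render time compared with the CUDA render time for the same scene and sample count. The times in the usage lines are hypothetical round numbers, not measurements from this test.

```python
def percent_change(cuda_seconds: float, optix_seconds: float) -> float:
    """Change in render time of Optix relative to CUDA, in percent.

    Positive values mean Optix took longer (slower);
    negative values mean Optix finished sooner (faster).
    """
    if cuda_seconds <= 0:
        raise ValueError("CUDA render time must be positive")
    return 100.0 * (optix_seconds - cuda_seconds) / cuda_seconds


if __name__ == "__main__":
    # Hypothetical timings, for illustration only.
    print(percent_change(100.0, 108.0))  # 8.0  -> Optix 8% slower
    print(percent_change(200.0, 190.0))  # -5.0 -> Optix 5% faster
```

Measuring against the CUDA time keeps every comparison on the same baseline. Note that a 5% shorter render time is not quite the same as a 5% higher throughput (CUDA time divided by Optix time would give about 1.053 in the second example), so it helps to say which one you mean when quoting "faster".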

Thoughts

Adding Nvidia's AI denoising to the viewport is very useful. It works well on GTX cards and is a great feature to bring to Blender users.

Optix for Cycles rendering, however, probably should not have been enabled for GTX. In most cases, it makes renders slower. The performance hit is small, so GTX users who choose Optix expecting faster renders are not risking much, but they gain nothing either. There may be specialized situations where Optix rendering on GTX makes sense, but these benchmarks do not show any.

As usual, if there are more things you’d like me to test, let me know in the comments below.