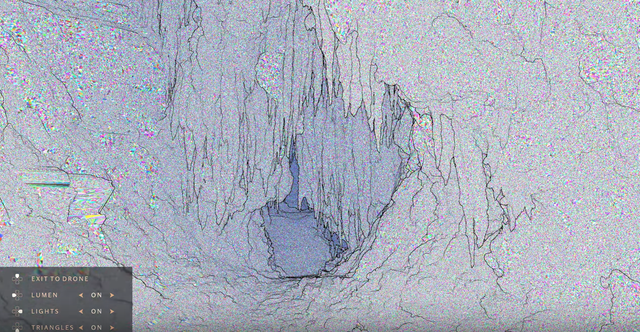

This is a surprising and amazing trailer of their next-gen graphics, showcasing the new Lumen and Nanite features.

It lets people use meshes with millions of polygons directly from ZBrush in game, without needing to make LODs or normal maps, all running with fully real-time global illumination.
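For context on what Nanite is claimed to remove: traditionally, artists author a chain of simplified LOD meshes, and the engine swaps between them based on how large each simplification error would look on screen. Below is a minimal sketch of that conventional selection. It assumes each LOD has a known world-space error in metres and a simple perspective camera. The function names and error values are hypothetical and are not taken from Unreal.

```python
import math

def projected_error_pixels(geometric_error: float, distance: float,
                           fov_y_radians: float, screen_height_px: int) -> float:
    """Project a world-space error (metres) to an approximate size in pixels."""
    # Height of the view frustum at this distance, in world units.
    frustum_height = 2.0 * distance * math.tan(fov_y_radians / 2.0)
    return geometric_error * screen_height_px / frustum_height

def select_lod(lod_errors: list, distance: float, fov_y_radians: float,
               screen_height_px: int, max_error_px: float = 1.0) -> int:
    """Return the coarsest LOD whose projected error stays under max_error_px.

    lod_errors[i] is a hypothetical world-space simplification error for LOD i,
    with index 0 the full-detail mesh and errors assumed to grow with i.
    """
    chosen = 0
    for i, err in enumerate(lod_errors):
        if projected_error_pixels(err, distance, fov_y_radians,
                                  screen_height_px) <= max_error_px:
            chosen = i
    return chosen

if __name__ == "__main__":
    errors = [0.0, 0.005, 0.02, 0.08]  # hypothetical per-LOD errors in metres
    for d in (1.0, 10.0, 100.0):
        print(d, select_lod(errors, d, math.radians(60), 1080))
```

The one-pixel error target is the same goal the demo describes, except that here an artist still has to build every LOD in the list by hand.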

Some details from the official site

The PS5's next-gen bandwidth and architecture should help drive future PC motherboard design.

Some feature details

Thanks! Not vector displacement or tessellation. That was my plan for this problem for many years but it’s not general enough. Stay tuned for more info.

https://twitter.com/BrianKaris/status/1260660677036957697

Can’t share technical details at this time, but this tech is meant to ship games not tech demos, so download sizes are also something we care very much about.

https://twitter.com/gwihlidal/status/1260597164318711808

To me, it looks more like displacement maps for micro-polygon details.

Jerome Platteaux, Epic’s special projects art director, told Digital Foundry that each asset has an 8K texture for base colour, another 8K texture for metalness/roughness and a final 8K texture for the normal map. However, this isn’t a traditional normal map used to approximate higher detail; it is a tiling texture for surface details.

“For example, the statue of the warrior that you can see in the temple is made of eight pieces (head, torso, arms, legs, etc). Each piece has a set of three textures (base colour, metalness/roughness, and normal maps for tiny scratches). So, we end up with eight sets of 8K textures, for a total of 24 8K textures for one statue alone,” he adds.
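To put the statue's numbers in perspective, here is a back-of-the-envelope memory estimate. It assumes "8K" means 8192 × 8192 texels and counts only the top mip level. It shows two cases: uncompressed RGBA8 at 4 bytes per texel, and a block-compressed format at 1 byte per texel (BC7 stores 16 bytes per 4 × 4 block). Which formats the demo actually used is not stated in the quote.

```python
SIZE = 8192             # "8K" texture edge length in texels (assumed)
PIECES = 8              # head, torso, arms, legs, etc.
TEXTURES_PER_PIECE = 3  # base colour, metalness/roughness, normal

MIB = 1024 ** 2

def texture_mib(size: int, bytes_per_texel: float) -> float:
    """Size of one texture's top mip level in MiB (no mip chain)."""
    return size * size * bytes_per_texel / MIB

def statue_budget_mib(bytes_per_texel: float) -> float:
    """Total for every texture on the statue at the given texel size."""
    count = PIECES * TEXTURES_PER_PIECE
    return count * texture_mib(SIZE, bytes_per_texel)

if __name__ == "__main__":
    print(PIECES * TEXTURES_PER_PIECE)  # 24 textures
    print(texture_mib(SIZE, 4))         # 256.0 MiB each, uncompressed RGBA8
    print(statue_budget_mib(4))         # 6144.0 MiB (6 GiB) uncompressed
    print(statue_budget_mib(1))         # 1536.0 MiB at 1 byte/texel (e.g. BC7)
```

Even compressed, that is about 1.5 GiB for one statue before mips. This is why streaming and download size, which Graham Wihlidal mentions above, matter so much.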

And virtual textures for shadows

“Really, the core method here, and the reason there is such a jump in shadow fidelity, is virtual shadow maps. This is basically virtual textures but for shadow maps. Nanite enables a number of things we simply couldn’t do before, such as rendering into virtualised shadow maps very efficiently. We pick the resolution of the virtual shadow map for each pixel such that the texels are pixel-sized, so roughly one texel per pixel, and thus razor sharp shadows. This effectively gives us 16K shadow maps for every light in the demo where previously we’d use maybe 2K at most. High resolution is great, but we want physically plausible soft shadows, so we extended some of our previous work on denoising ray-traced shadows to filter shadow map shadows and give us those nice penumbras.”
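The quote describes picking, for each pixel, the virtual shadow map resolution at which shadow texels come out roughly pixel-sized. A 16K map (16384²) has 64 times the texels of a 2K map (2048²), so only the parts that visible pixels actually touch can be backed by real memory. Below is a minimal sketch of that page-marking idea. It assumes a 16K virtual map split into 128 × 128-texel pages, and a precomputed per-pixel footprint, meaning how many mip-0 shadow texels one screen pixel spans. The page size, function names and mip rule are hypothetical illustrations, not Epic's implementation. Rendering into the pages and the penumbra filtering are not shown.

```python
import math

VIRTUAL_SIZE = 16384  # 16K virtual shadow map, as in the quote
PAGE_SIZE = 128       # hypothetical page edge length in texels

def mip_for_footprint(texels_per_pixel: float, max_mip: int) -> int:
    """Pick the mip whose texels are roughly pixel-sized.

    At mip m one texel covers 2**m mip-0 texels, so we want 2**m ~ footprint.
    """
    if texels_per_pixel <= 1.0:
        return 0
    return min(max_mip, int(math.floor(math.log2(texels_per_pixel))))

def page_for(u: float, v: float, mip: int) -> tuple:
    """Map a shadow-space UV in [0, 1) to (mip, page_x, page_y)."""
    size = VIRTUAL_SIZE >> mip
    pages = max(1, size // PAGE_SIZE)
    px = min(pages - 1, int(u * pages))
    py = min(pages - 1, int(v * pages))
    return mip, px, py

def mark_needed_pages(samples) -> set:
    """samples: iterable of (u, v, texels_per_pixel), one per screen pixel.

    Returns the set of virtual pages that would need physical memory.
    """
    max_mip = int(math.log2(VIRTUAL_SIZE // PAGE_SIZE))  # 7: one page left
    needed = set()
    for u, v, footprint in samples:
        needed.add(page_for(u, v, mip_for_footprint(footprint, max_mip)))
    return needed

if __name__ == "__main__":
    # Two nearby pixels share a full-resolution page; a distant pixel
    # (4 texels per pixel) lands on a coarser mip.
    pixels = [(0.5, 0.5, 1.0), (0.501, 0.5, 1.0), (0.9, 0.1, 4.0)]
    print(sorted(mark_needed_pages(pixels)))
```

The point of the design is that memory scales with the number of distinct pages on screen, not with the full 16K resolution of every light.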

Some compute shaders, perhaps

“The vast majority of triangles are software rasterised using hyper-optimised compute shaders specifically designed for the advantages we can exploit,” explains Brian Karis. “As a result, we’ve been able to leave hardware rasterisers in the dust at this specific task. Software rasterisation is a core component of Nanite that allows it to achieve what it does. We can’t beat hardware rasterisers in all cases though so we’ll use hardware when we’ve determined it’s the faster path. On PlayStation 5 we use primitive shaders for that path which is considerably faster than using the old pipeline we had before with vertex shaders.”
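To make "software rasterised" concrete, here is a generic edge-function (half-space) rasterizer. It is a serial Python stand-in for the kind of per-triangle loop a compute shader thread could run on a tiny triangle. It also includes a hypothetical area threshold for choosing between the software and hardware paths. This is a textbook technique, not Nanite's rasterizer. The quote does not say how the fast path is chosen, so `SW_MAX_AREA_PX` and `choose_path` are invented for illustration.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]
Tri = Tuple[Vec2, Vec2, Vec2]

SW_MAX_AREA_PX = 32.0  # hypothetical cut-off for the software path

def edge(a: Vec2, b: Vec2, p: Vec2) -> float:
    """Signed edge function: > 0 when p is left of a->b (counter-clockwise)."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(tri: Tri, width: int, height: int) -> List[Tuple[int, int]]:
    """Return covered pixels by testing each pixel centre against 3 edges.

    No top-left fill rule: a pixel centre exactly on an edge shared by two
    triangles is counted by both.
    """
    a, b, c = tri
    area2 = edge(a, b, c)  # twice the signed area
    if area2 == 0:
        return []          # degenerate triangle
    if area2 < 0:
        b, c = c, b        # normalise to counter-clockwise winding
    # Screen-clamped bounding box: small triangles only visit a few pixels.
    x0 = max(0, math.floor(min(a[0], b[0], c[0])))
    x1 = min(width - 1, math.floor(max(a[0], b[0], c[0])))
    y0 = max(0, math.floor(min(a[1], b[1], c[1])))
    y1 = min(height - 1, math.floor(max(a[1], b[1], c[1])))
    covered = []
    for y in range(y0, y1 + 1):
        for x in range(x0, x1 + 1):
            p = (x + 0.5, y + 0.5)
            if edge(a, b, p) >= 0 and edge(b, c, p) >= 0 and edge(c, a, p) >= 0:
                covered.append((x, y))
    return covered

def choose_path(tri: Tri) -> str:
    """Hypothetical rule: small triangles in software, large ones in hardware."""
    area_px = abs(edge(*tri)) / 2.0
    return "software" if area_px <= SW_MAX_AREA_PX else "hardware"

if __name__ == "__main__":
    small = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
    big = ((0.0, 0.0), (100.0, 0.0), (0.0, 100.0))
    print(choose_path(small), len(rasterize(small, 8, 8)))  # software 10
    print(choose_path(big))                                 # hardware
```

Hardware rasterisers work on quads and fixed-size tiles, so they waste work when a triangle covers only a pixel or two. A tight per-triangle loop like this avoids that overhead, which fits Karis's point that software wins for this specific workload but not in all cases.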

Another big change is the new licensing: royalties are now waived on a game's first US$1 million in gross revenue.

And the multiplayer services (Epic Online Services) are completely free for any Unreal user.