Well. Those nanite meshes are supposed to compress real well. So, meh, shouldn’t make that much of a difference. In fact, might actually be smaller since you now don’t need to normal map every single little thing. And you can use tiling textures more because you can now have mesh bevels instead of doing that with textures. Bevel! The tech of the future!

The engine seems to handle huge meshes faster than it fakes geometric detail with shader-based displacement. Also, if you really want, you can further reduce the polygon count with something like Houdini’s PolyReduce, which can be driven by a weight map so that you preserve detail where you need it.

Nanite still supports Normal Maps, so you can still add smaller details to your meshes without increasing the polycount to an extreme level.

I know that displacement is technically not “real geometry”, but based on what I have seen, the whole idea of runtime procedural generation to reduce the file size of scenes (as well as the creation time) seems to have been thrown out the window.

I mean, look at all of the praise Houdini gets just for its procedural generation. I would think today’s hardware is more than sufficient for games to use procedural data to not only shave gigabytes off the size of AAA games, but also shave millions of dollars off the cost of creating the assets and the worlds (as you would be able to generate the same amount of content with smaller teams over a shorter period).

If you remember, not even Substance materials are used to generate textures at runtime; they are all baked down to individual maps when you use them in engine. With Houdini you can procedurally generate rocks or larger structures, but they are static objects. You need to cook the object first; it is not something you can do on the fly. It requires a lot of memory too: the starting data may be light, but the generated data is not, so where do you store it?
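To put rough numbers on the "light input, heavy output" point, here is a minimal Python sketch. It is only an illustration, not how Houdini or Substance work internally: `generate_heightmap` is a hypothetical stand-in that expands a single seed into a grid of height samples, and both the 8-byte seed and the 16-bit sample size are assumptions.

```python
import random

def generate_heightmap(seed: int, size: int) -> list[float]:
    """Hypothetical stand-in for a procedural generator: seed in, size*size heights out."""
    rng = random.Random(seed)  # the same seed always reproduces the same data
    return [rng.random() for _ in range(size * size)]

def cooked_size_bytes(sample_count: int, bytes_per_sample: int = 2) -> int:
    """Size of the generated data if every sample is kept (assumes 16-bit samples)."""
    return sample_count * bytes_per_sample

SEED_BYTES = 8  # assumption: the whole "recipe" is a single 64-bit seed
heights = generate_heightmap(seed=42, size=512)
print("input bytes: ", SEED_BYTES)
print("cooked bytes:", cooked_size_bytes(len(heights)))  # 512 * 512 samples * 2 bytes
```

The recipe is tiny, but the cooked result still has to live somewhere: either it is regenerated into memory on every load, or it is stored on disk like any other asset.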

Tessellation has been around for at least 10 years now, and now there’s also such a thing as vector displacement.

In addition, runtime procedural content has been seen in games for phones. They are simple operations in those cases, but we live in an age where (for the PC) octa-core processors with 16 threads are becoming mainstream, and consumer-grade 16-core, 32-thread options are also becoming more common. If that is not enough, there are rumors of Zen 4 coming out with a 24-core, 48-thread consumer model.

Even Blender’s own Eevee engine can render entirely procedural materials at real-time framerates, and the same applies to meshes that use modifiers to create data at runtime. We need to acknowledge the reality that few are willing to spend thousands of dollars on storage alone so they can have a library of games each weighing in at hundreds of gigabytes (unless we have a breakthrough in SSD/M.2 storage density for consumer models).

With displacement you have to subdivide the mesh to get a decent result, and it is still a rough representation of the details. You also need a normal map to make the smaller details readable. With Nanite you can drop a map, which is usually 16-bit (4 bit per channel).
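As a rough idea of what "subdivide the mesh" costs, here is a small Python sketch. It assumes an all-quad mesh and uniform subdivision where every level splits each quad into four; real-time tessellation is usually adaptive, so treat this as an upper-bound illustration. The 100-quad prop is a made-up example.

```python
def faces_after_subdivision(base_faces: int, levels: int) -> int:
    """Quad count after `levels` rounds of uniform subdivision (each quad becomes four)."""
    return base_faces * 4 ** levels

base = 100  # hypothetical low-poly prop made of 100 quads
for level in range(7):
    print(level, faces_after_subdivision(base, level))
```

The count grows by a factor of four per level, which is why displaced meshes get heavy long before they resolve fine detail.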

The procedural stuff you are seeing is probably some kind of pre-made models that are fed into Houdini Engine and then arranged according to how you structured the tool. Have you seen how fast it updates the scene when you make a change? And we are talking about “simple” stuff:

It’s not feasible for real time usage inside a game.

Yes.

Fine detail in any texture btw, but especially in normal maps, tends to result in much less redundancy in the color data, i.e. it is fundamentally harder to compress. The average difference between albedo/orm to normal in most stuff I paint is at about 1,000 to ~10,000ish, and I’m fairly minimalist.

Filesize though doesn’t matter once the data is decoded and loaded in memory. So take out a texture and bam, more space to use for geometry. Potential gains then wouldn’t be limited to just plain download size.
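A quick Python sketch of why the on-disk size stops mattering once a texture is loaded: the in-memory footprint depends on resolution and pixel format only. The function name and defaults are mine, and it ignores the minimum block size of block-compressed GPU formats, so the smallest mips are slightly underestimated there.

```python
def texture_bytes(width: int, height: int, bits_per_pixel: int = 32,
                  with_mips: bool = True) -> int:
    """Approximate in-memory size of a texture, optionally with its full mip chain."""
    total_pixels = 0
    w, h = width, height
    while True:
        total_pixels += w * h
        if not with_mips or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)  # each mip halves both dimensions
    return total_pixels * bits_per_pixel // 8

# 4096 x 4096, uncompressed RGBA8 (32 bits per pixel), no mips
print(texture_bytes(4096, 4096, 32, with_mips=False) / 2**20, "MiB")
# Same texture at 8 bits per pixel (e.g. a block-compressed format), with mips
print(texture_bytes(4096, 4096, 8) / 2**20, "MiB")
```

Whatever that number comes out to per map is the budget you get back for geometry when the map is removed.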

So again, yes. Good point.

Based on what I read, one of the biggest concerns about the expectation to sculpt everything is that Unreal users will no longer be able to accelerate the development of assets (and cut down on file size) using tiled textures (if they want displacement).

Let us look at the problem in the context of a terrain. You can easily argue that an engine vendor is being unreasonable if they expect you to multires sculpt a terrain the size of a city (as opposed to just using high-resolution displacement maps with splatting). What that means is that the workload for indies in this case is greatly increased and would require hiring one or more people whose entire job would be sculpting.

It would be the same with buildings as well (which also benefit greatly from using tiled displacement textures). Then there’s the fact that the asset may have to be close to its final form when the actual material development and detailing starts (which further increases the workload).

You can buy good terrain scans and assets pretty cheaply now, if all the free Megascans stuff Epic is offering isn’t enough.

Who is feeding you this information?

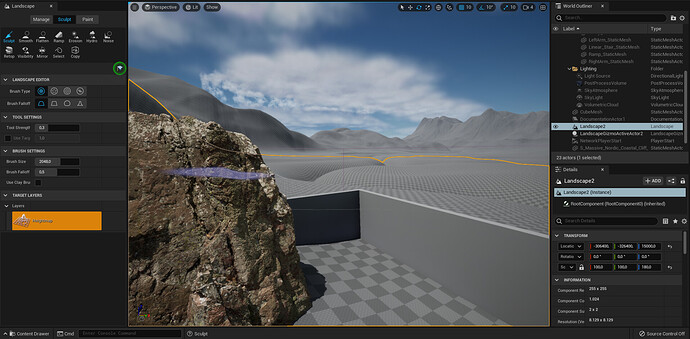

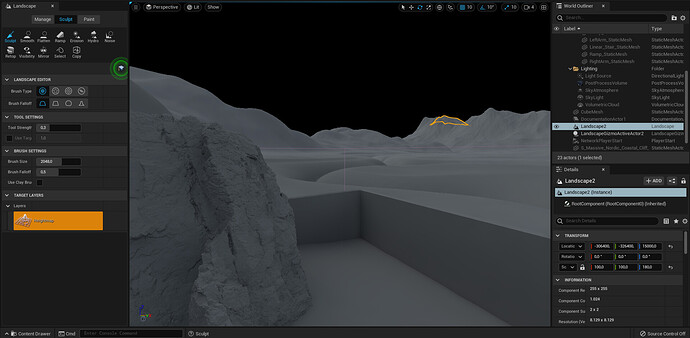

The Landscape (terrain) is still there in UE5, and it works with Heightmaps and Lumen.

(Lumen Global Illumination view)

I assume you have seen threads like this one now?

Perhaps more disturbing than the removal of runtime non-destructive/procedural workflows (for purposes such as minimizing asset creation times and file size), is the highly elitist tone of some of the Unreal fans (where a person looks down on others because their workflow uses features they have no personal use for). I no longer need feature X and I can afford to spend the thousands of dollars needed to take full advantage of the new workflow, therefore no one should be allowed to have it.

You can still use tiling textures, just try it, and again, it works with the Landscape.

I don’t understand what the problem is, these people complaining aren’t going to make a game that requires such high level details with every part of it made of “hero” assets anyway. If they don’t like it, or can’t keep up with the engine requirements they can use UE4 or switch to something else, like Unity or Godot.

How many indie game studios are making games of the quality of The Last of Us (the first game) with UE4?

Where were you when UE4 (and UDK) shipped with Lightmass, which required hours or even days to render Global Illumination for a level on a standard desktop computer? Game companies can use render farms, while indies have to use what they already have to cut costs. Isn’t this requirement to bake lighting information just to get a decent result, at least on par with the quality of other studios, absurd?

We know that these people are pretending to make a game, like everyone else who “uses” Unreal Engine 4/5.

This is not completely accurate. Lumen currently does not work with Landscape, but it’s planned to. Right now, landscape will only occlude Lumen illumination but won’t actually bounce light. You will see that when you switch visualization to “Lumen Scene”. You will notice the Landscape disappears, as it has no Lumen representation.

You’re right, and some Nanite meshes don’t show up in the Lumen Scene view either.

This is even more impressive than the first demo. Look at the realtime soft shadows and the amount of detail in realtime. What is interesting is how the clusters work: 128 polys per cluster and 128 clusters in a larger cluster …
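For a sense of scale, here is the arithmetic for that two-level grouping as a Python sketch. The two constants are just the figures quoted in the post above (treating "polys" as triangles), not values checked against Epic's documentation, and `cluster_counts` is a made-up helper.

```python
import math

TRIS_PER_CLUSTER = 128    # figure quoted in the post, unverified
CLUSTERS_PER_GROUP = 128  # likewise taken from the post

def cluster_counts(triangle_count: int) -> tuple[int, int]:
    """Return (clusters, larger clusters) needed to hold `triangle_count` triangles."""
    clusters = math.ceil(triangle_count / TRIS_PER_CLUSTER)
    groups = math.ceil(clusters / CLUSTERS_PER_GROUP)
    return clusters, groups

print(TRIS_PER_CLUSTER * CLUSTERS_PER_GROUP)  # triangles in one full larger cluster
print(cluster_counts(1_000_000))              # a one-million-triangle mesh
```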

It looks like what Euclideon’s “Unlimited Detail” technology wished it could be, that company may very well go bankrupt now because of Epic bringing the real deal (without all of the marketing fluff and with real shading).

It is a good thing that scene is primarily made with instances, as a full game with 110 unique polygons per level (with textures to match) may not even be possible to store on home hardware.

I dare you to export to FBX and import into Blender (or anything)

Actually, I wonder if export to FBX even works with Nanite meshes as I’ve not tried yet.

I was a great fan of their work, and I eagerly anticipated every release. But apart from being great technologists, they missed the point of how businesses work these days.

I know what you mean: Blender’s asset IO is implemented in Python and is totally inefficient in some cases. Especially if we are chasing Nanite levels of detail, we could leave the PC exporting overnight until dawn.

There might be some better way to overcome this problem. Perhaps ditch the concept of asset IO as addons entirely. Port everything to C++.