Well, hello again to the WIP section and Happy New Year! I suspect some of you have been wondering what I have been up to lately, or you may just be pleased I have been quiet for so long.

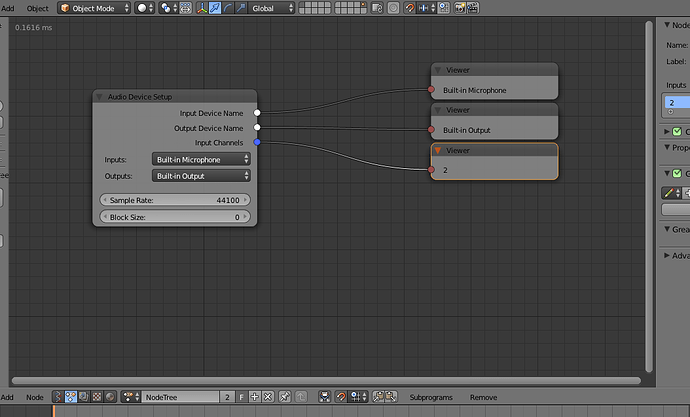

I, along with my new French friend (yes, we have both got over Hastings and Waterloo and are good friends across the Channel now), have been working on realtime MIDI in Blender. I thought I would share where we are at just now, so here are some images and some explanatory waffle:


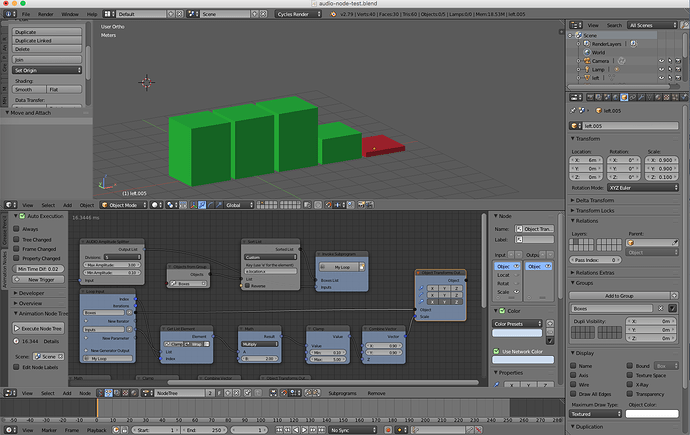

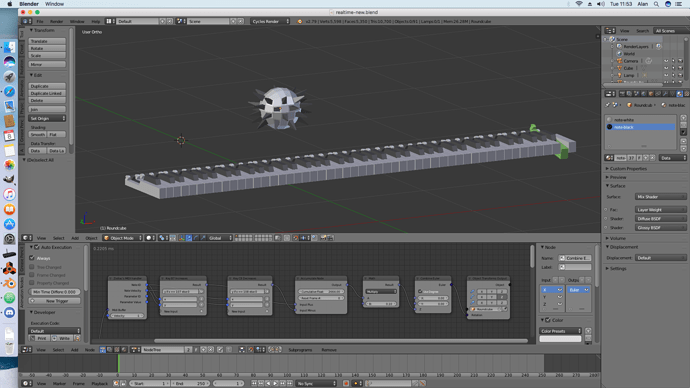

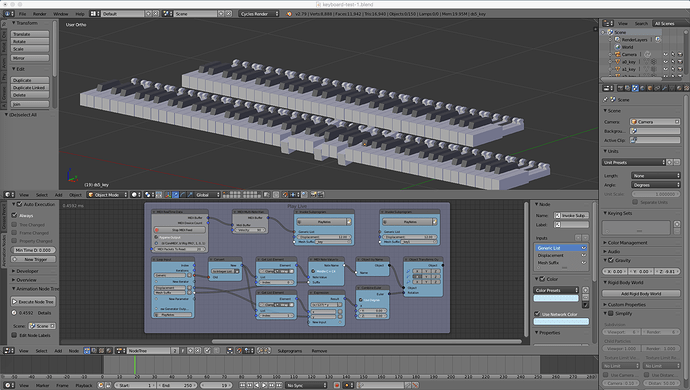

This one shows a strange spiky thing, which turns one way if you press key B7 and the other way if you press C8. Not at all thrilling really, but it all happens in Realtime from my connected MIDI keyboard. The keys on the virtual keyboard change colour as you play them, using my Material Nodes in Animation Nodes (AN).
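For anyone wondering what the B7/C8 trick boils down to, here is a minimal sketch in plain Python, not the actual Animation Nodes setup. It assumes raw MIDI messages arrive as (status, note, velocity) values and that middle C is note 60 (so B7 is 107 and C8 is 108). The names `update_held`, `rotation_delta` and `STEP` are made up for illustration, and which key turns which way is an arbitrary choice here.

```python
import math

# Assumed note numbers, using the "middle C = C4 = 60" convention.
B7, C8 = 107, 108

# Hypothetical rotation applied per update while a key is held (5 degrees).
STEP = math.radians(5)


def update_held(held, status, note, velocity):
    """Track which notes are currently held, given one raw MIDI message."""
    kind = status & 0xF0  # drop the channel nibble
    if kind == 0x90 and velocity > 0:
        held.add(note)  # note on
    elif kind == 0x80 or (kind == 0x90 and velocity == 0):
        held.discard(note)  # note off (or note on with velocity 0)
    return held


def rotation_delta(held):
    """Positive while B7 is held, negative while C8 is held, zero otherwise."""
    return STEP * ((B7 in held) - (C8 in held))
```

Each frame, the result of `rotation_delta` would be added to the object's rotation about whichever axis you fancy.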

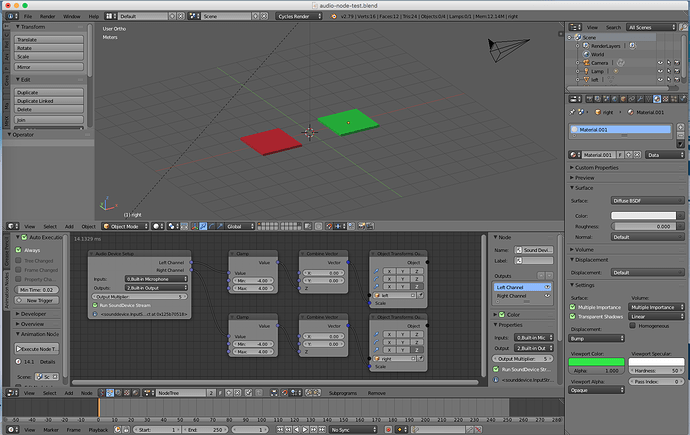

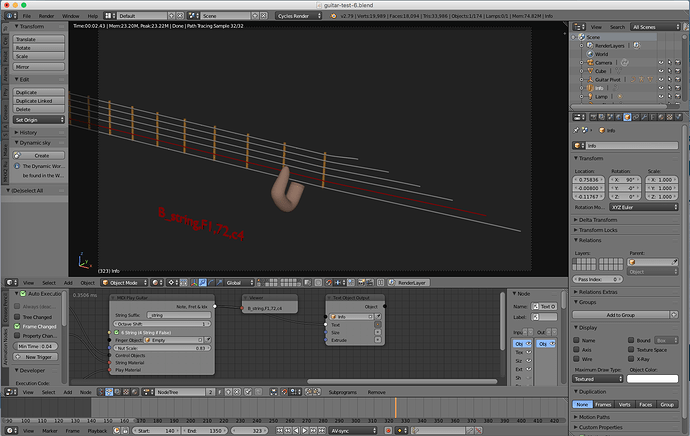

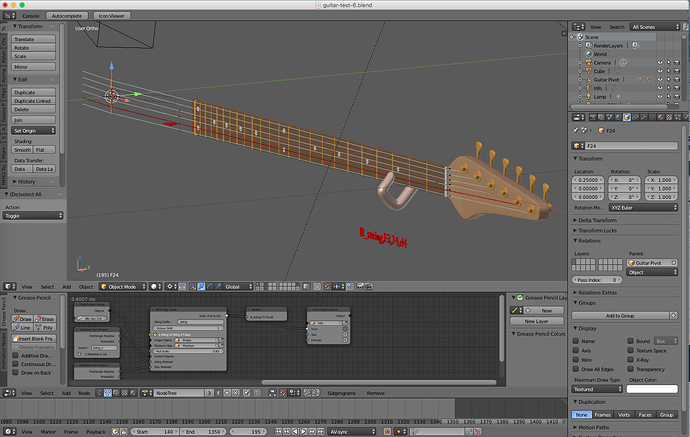

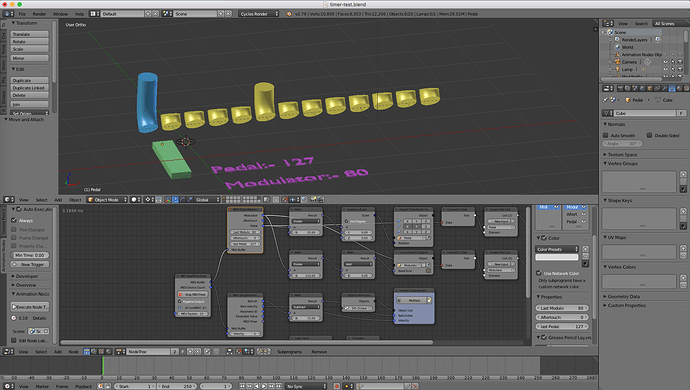

This one shows the latest Realtime system in operation, driving my virtual keyboard from my real one. We have added a function to automatically create the keyboards in either 88-key or 61-key flavours. We have also added a system to rename a set of meshes so they match your keyboard, to make key assignment dead easy.
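To give a flavour of the naming idea, here is a rough sketch in plain Python of how note names for each keyboard size could be generated. It is not the code from our nodes. It assumes middle C is MIDI note 60 (C4), that an 88-key board runs from A0 to C8, and that a 61-key board runs from C2 to C7, which is common but not universal. The names `KEYBOARD_RANGES`, `note_name`, `key_names` and `is_black_key` are hypothetical.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Assumed (lowest, highest) MIDI note numbers for each keyboard size.
KEYBOARD_RANGES = {88: (21, 108), 61: (36, 96)}


def note_name(midi_note):
    """Turn a MIDI note number into a name such as 'C4' (middle C = 60)."""
    octave = midi_note // 12 - 1
    return f"{NOTE_NAMES[midi_note % 12]}{octave}"


def key_names(size):
    """List the key names for a keyboard of the given size, low to high."""
    low, high = KEYBOARD_RANGES[size]
    return [note_name(n) for n in range(low, high + 1)]


def is_black_key(midi_note):
    """Black keys are the sharps in this naming scheme."""
    return "#" in NOTE_NAMES[midi_note % 12]
```

With a list like `key_names(88)` in hand, a set of meshes sorted from left to right could be renamed one for one, so that each incoming note finds its key by name.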

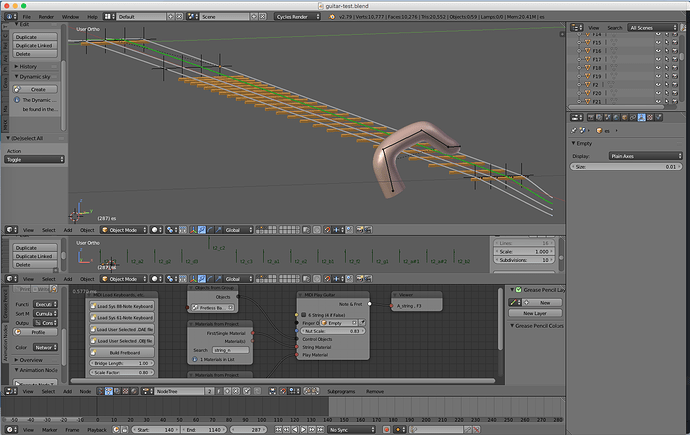

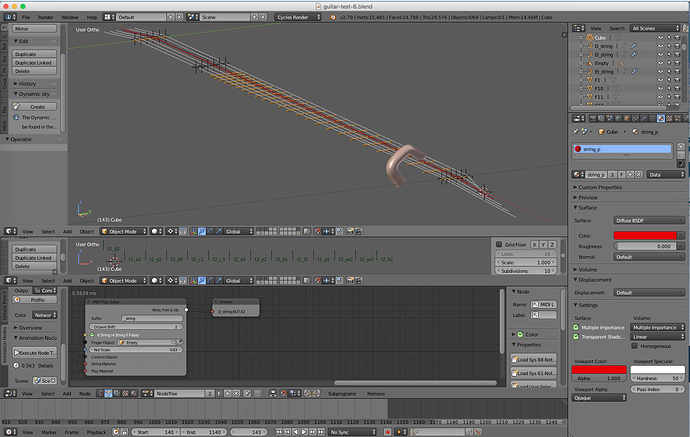

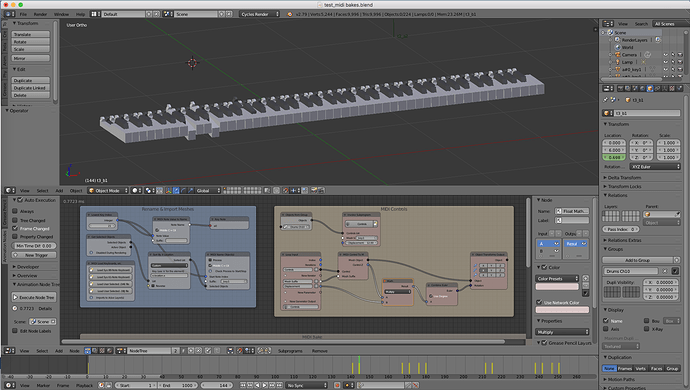

This one shows some of the new nodes driving a virtual keyboard from a MIDI file. I have rebuilt my system from a little while ago, so it is easier to use, more understandable and more efficient.

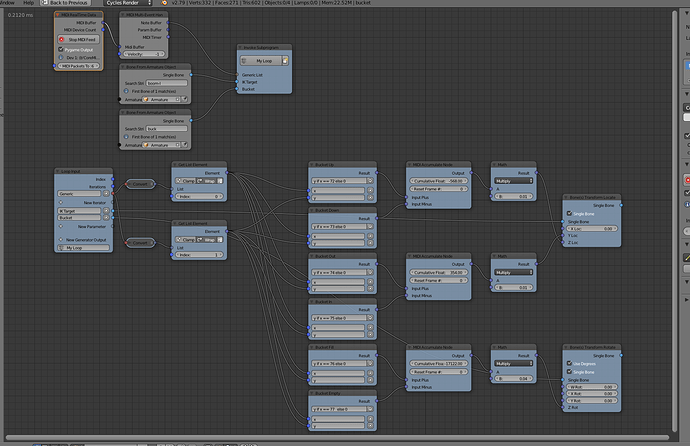

This one shows the full node tree from above, with the two methods of reading MIDI files, i.e. controls or baked curves. Both now run much better than before. Not that they were crap, you understand, they just could be improved.

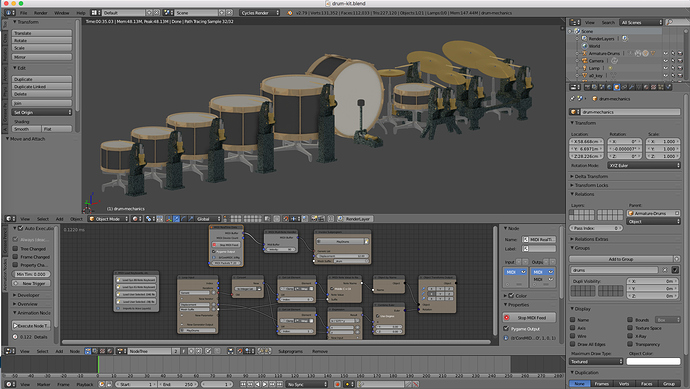

This one shows a virtual drum kit being played by a real keyboard; this one is rendered. We hope to be able to run this system in 2.8, with EEVEE, once it is more stable, “it” being Blender 2.8…

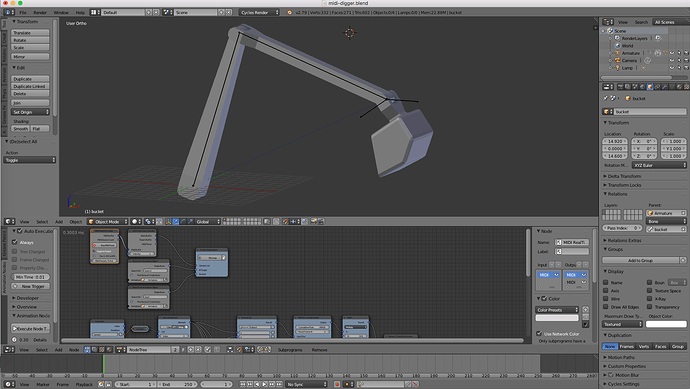

This one proves I am not b*********g and can really do this stuff. That’s Blender and Reason being driven simultaneously with one keyboard and one iRig interface.

The new system is all written in Animation Nodes and comprises 10 new nodes at the moment. This may increase as we add more functionality; we have our sights set on Realtime Audio as well.

So, I shall keep you updated as progress is made. The system is not fit to be made available yet, but we hope to have it on my Old-Git-Hub at some point later on. Also, just now you have to install Python Modules and other stuff into Blender’s Python directory and other places, all by hand, and it’s a pain in the rectum to do this. We hope to have a complete install script at some stage.

Well, that is why I have been so quiet of late: so much work here and many things I just did not understand at my old age, but I am getting there with all this new Python Modules Black Magic stuff.

Cheers, Clock.

and spending quality time with Jack and Jim.
