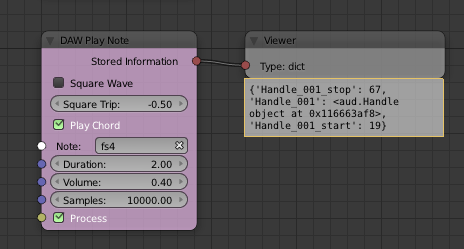

Now I have MIDI working in 2.8:

And Audio:

Much drinking and testing is now called for…

Cheers, Clock.

I rebuilt the MIDAS node, so you get more 3D view to play with:

And now I use my new “Get Selected Items” node that can use either Bones, or Objects. I note purely in the interests of keeping you informed that the standard “Get Selected Objects” node in AN2.1 for Blender 2.8 DOES NOT WORK!

Cheers, Clock.

EDIT:

I forgot to say that the MIDAS node now uses DataTypeSelectorSockets so I don’t need to check whether bones, or objects are being sent as inputs.

EDIT2:

I have now tested that all my Live MIDI and Audio stuff works with EEVEE render in operation. The only thing is you cannot have AN set to “Always” Execute; it has to be “Frame Changed”, then run the animation (ALT+A).

OK latest update:

Now I have a node that takes an incoming note name, converts it into a frequency (yes, the right one) and then plays that as a note through AudaSpace. This allows me to play many notes simultaneously, something the SoundDevice did not let me do… It also has some really nice features to generate, filter, limit and set the volume of sounds.
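For anyone wondering how the note name to frequency step might work, here is a minimal sketch in plain Python. It is not the code from my node; the function name and the note-name format it accepts are assumptions for illustration, and it assumes equal temperament with a4 tuned to 440 Hz.

```python
import re

# Semitone offsets from C within one octave.
NOTE_OFFSETS = {"c": 0, "d": 2, "e": 4, "f": 5, "g": 7, "a": 9, "b": 11}


def note_to_frequency(name, a4_hz=440.0):
    """Convert a note name such as 'c5' or 'f#3' to a frequency in Hz.

    Hypothetical helper: assumes equal temperament and that a4 is 440 Hz.
    """
    match = re.fullmatch(r"([a-gA-G])([#b]?)(-?\d+)", name.strip())
    if match is None:
        raise ValueError(f"Unrecognised note name: {name!r}")
    letter, accidental, octave = match.groups()
    semitone = NOTE_OFFSETS[letter.lower()]
    if accidental == "#":
        semitone += 1
    elif accidental == "b":
        semitone -= 1
    # MIDI numbering: c-1 is 0, so a4 is 69.
    midi_number = 12 * (int(octave) + 1) + semitone
    # Each semitone multiplies the frequency by the twelfth root of two.
    return a4_hz * 2.0 ** ((midi_number - 69) / 12)


print(round(note_to_frequency("c5"), 4))
```

With this numbering “c5” comes out at about 523.25 Hz and “a4” at exactly 440 Hz.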

I can also play multiple sound files at once, so a Sampler Synth may well be a possibility. I am just driving the new disgusting pink node with my “Periodic Trigger” for now, next is to animate some objects to drive the node - I think I might just play a scale as a first option.

Yay!!! I am so chuffed I got this working, keeping the volume down and stopping the node executing again until the note had finished was key to this success. I must also thank Omar (he of AN development fame) for his input to get me going in the right direction.

Cheers, Clock.

Added a new “Disgusting Pink” node:

This one plays rising, or decaying scales based upon input note and can be linked as shown above.

I have just noticed that AUD seems to be available in Blender without having to be loaded… I have never added AUD to Blender 2.8, yet it appears to be there, very curious.

Cheers, Clock.

EDIT:

I forgot to say in my senility, that I have also made the Play Notes node play chords, starting with the input note:

EDIT 2:

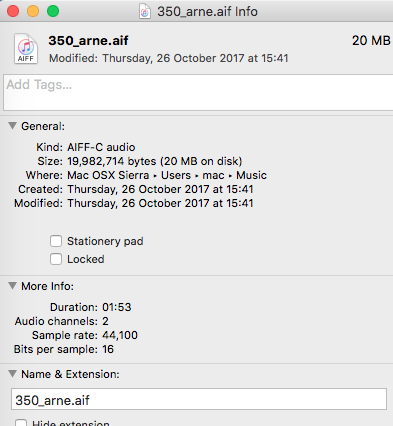

Does anyone know how I can read the “Duration” info from a music file with python? Example below:
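One possible answer, for uncompressed WAV files only, is the `wave` module from the Python standard library: the duration is the number of frames divided by the frame rate. This is a minimal sketch with a hypothetical function name; MP3 and other compressed formats would need a different library.

```python
import wave


def wav_duration_seconds(path):
    """Return the duration of a WAV file in seconds (frames / frame rate)."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getnframes() / wav_file.getframerate()
```

Calling `wav_duration_seconds("snare.wav")` (the file name is just a placeholder) returns a float number of seconds.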

Hmmmmm, today one of these arrived on my doorstep, I had ordered it, so no, it wasn’t “hot”.

I plugged it into my MacBook and fired up Blender, loaded one of my MIDI Realtime nodes, one of my MIDI Single Event Handler nodes and a few standard nodes and now my new toy is controlling the animation of the cube below:

Which rotates as I move three of the sliders. So now I am going to modify my MIDAS node so it can use the Korg as well as my keyboard, aren’t I going to be busy… The possibilities for this seem almost endless, with its 51 controls.

Cheers, Clock. (Where’s the “Korg nanoKONTROL 2” emoji by the way…)

I have reproduced many of the nodes from AudioNodes (an old demo system by NexYon) in Animation Nodes:

Including a multi-type Sound Generator, 5 Channel Mixer, up to 10 Channel Echo unit, Pitch Bender, Sound Joiner and Single Channel Delay. I don’t think there is a need for everything as some of my nodes do the job of several in AudioNodes.

I need to test these more before uploading to my GitHub.

Cheers, Clock.

EDIT:

Now I have a Volume node, High/LowPass Filter and BiQuad IIR/FIR Filter:

Just need to learn how to use it now…

That begins to look more and more like a “Modular synthesizer” system. Very nice. I developed 3 modular synthesizer engines in the past and I’ll absolutely have to look at it (I already promised that before about the MIDI stuff you made).

Do you plan to add VCOs and LFOs, maybe, in order to generate sound instead of using a “Sound file”?

First, thank you for the kind comment, I really appreciate that.

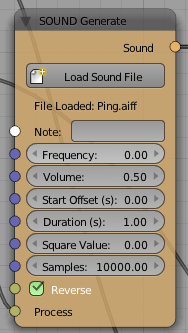

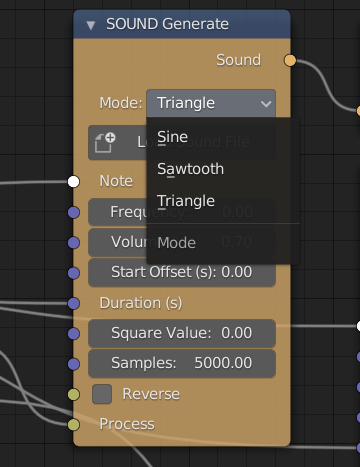

Second, here is my Sound Generator:

So with this I can:

Play a sound file, Maybe a full song, or just a snare drum for example.

Play a note, so if I put “c5” into the Note input, my node will set the frequency to 523.2511 Hz (I think that’s right, sometimes I look at my frequency function too much), or I can specify a frequency directly. I can also set the Volume, the Start Offset (to delay the start) and the Duration, and Square the sine wave used to produce the sound. Then I can reverse sound clips; I have tested this with drum samples and it works well.

The Pitch Bender node can then play havoc with the sound, as can the Fader, Delay, Echo unit, IIR/FIR Filter etc. The Fader can be used to set Attack and Release. I may need to work on this a little to make it part of the generator, or just leave it as is. Maybe I will make the node apply both fade-in and fade-out to the sound, rather than either/or as it is now; I think this gives me an ADSR perhaps?

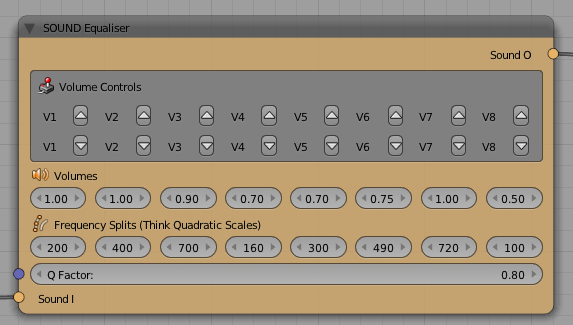

I have also now built an 8 band Equaliser:

This does not show the actual frequencies which were 200, 400, 700, 1600, 3000, 4900, 7200, 10000 - I squashed the node a little too much…

There is other stuff in AudaSpace that is not in the API, so if I could find some help here to add the missing bits, that would be a great start: the Triangle & Sawtooth Oscillators, the ADSR and the Convolver, for example.

Next I think I should re-spec the prototype Sequencer I built into something more workable. I am also thinking of setting the FPS for the Blender project based upon the required BPM and shortest note, so if the BPM is 120 (2 beats per second) and the shortest note is 1/16, I would set the Frames Per Second to 32. Blender does not seem to mind if I run it at 34.784 FPS, if that is what the maths would give me. That means that one frame is exactly 1/16th beat, so the playback would be right as FPS matches Beat.
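The arithmetic above can be sketched like this. The function names are made up for illustration, and the second helper rests on the assumption that Blender's effective frame rate is the integer `fps` divided by the float `fps_base` in the scene render settings, so treat it as a starting point rather than tested node code.

```python
import math


def fps_for_tempo(bpm, frames_per_beat=16):
    """Frame rate at which one frame lasts exactly 1/frames_per_beat of a beat."""
    beats_per_second = bpm / 60.0
    return beats_per_second * frames_per_beat


def split_for_blender(frame_rate):
    """Split a possibly fractional rate into (fps, fps_base) with fps / fps_base == frame_rate.

    Assumption: Blender stores an integer fps plus a float fps_base divisor.
    """
    fps = math.ceil(frame_rate)
    fps_base = fps / frame_rate
    return fps, fps_base


print(fps_for_tempo(120))        # 120 BPM, 16 frames per beat
print(split_for_blender(34.784))
```

For 120 BPM this gives 32 frames per second; an awkward rate such as 34.784 becomes an `fps` of 35 with an `fps_base` slightly above 1.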

I have absolutely no experience with C++ so rebuilding the AudaSpace API is beyond my capabilities, building the nodes is not…

Cheers, Clock.

Now I have a working prototype Sequencer that plays up to 3 notes at a time so far, I will alter this to be up to 10 - I only have 10 digits to play a piano, unless I play another one with my…

Anyway here is the image:

More later, too tired to explain it all now.

Cheers, Clock.

Edit:

I discovered that AudaSpace is built into Blender and Blender 2.8 comes with the latest release with all the new gizmos in it.

@warnotte here’s the new generator with 3 oscillators now:

Modified layout for the nodes and improved performance:

I need to add Bars and Time Signatures…

Cheers, Clock.

@warnotte - Can you talk to me about LFOs? I assume I need to generate a low frequency sound (less than 20Hz) and then multiply the original sound by the LFO sound. The only problem is I don’t seem to be able to find a function in AudaSpace to do that, and “mixing” the two sounds does not work.

I have also found a better way to trigger the sound generation, if the duration is greater than 0, rather than having a separate Boolean input.

Cheers, Clock.

@clockmender Yes, an LFO is a VCO of very low frequency. The LFO will be used as a modulator for the amplitude, frequency or phase of a VCO (or another LFO). I don’t know AudaSpace so I cannot help you.
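To make the amplitude case concrete, here is a minimal sketch in plain Python working on lists of samples. It does not use AudaSpace at all; the function names and the `depth` parameter are assumptions for illustration. The point is that modulation multiplies the two signals sample by sample, whereas mixing only adds them.

```python
import math


def sine_wave(freq_hz, duration_s, sample_rate=44100):
    """Generate a sine wave as a list of floats in the range -1..1."""
    count = int(duration_s * sample_rate)
    return [math.sin(2.0 * math.pi * freq_hz * i / sample_rate) for i in range(count)]


def apply_tremolo(carrier, lfo, depth=0.5):
    """Amplitude-modulate carrier by lfo.

    The gain swings between (1 - depth) and 1 as the LFO moves from -1 to +1,
    so the output never gets louder than the original carrier.
    """
    return [c * (1.0 - depth * 0.5 * (1.0 - m)) for c, m in zip(carrier, lfo)]


tone = sine_wave(440.0, 1.0)   # audible oscillator (the VCO)
lfo = sine_wave(5.0, 1.0)      # 5 Hz, well under the 20 Hz mentioned above
wobbling_tone = apply_tremolo(tone, lfo, depth=0.8)
```

Multiplying the carrier directly by the LFO (`c * m`) is also possible, but it silences the tone every time the LFO crosses zero, which is why the sketch scales the LFO into a gain first.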

Latest Update:

More functions, cleaned up code, etc.

Cheers, Clock.

EDIT:

These nodes are built so far:

These nodes just grew a little:

My little Mac can easily cope with 10 simultaneous notes, so I upped it to 10 notes. I also added an option to Quantise the notes, or “De-Quantise” them by applying little random shifts in time and note length. Then I added the controls for the Piano Roll camera so you can move up, or down and zoom in, or out.
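As a rough idea of what Quantise and “De-Quantise” mean here, a minimal sketch follows. Notes are represented as hypothetical `(start, length)` tuples in seconds; the function names, the grid size and the shift range are all assumptions for illustration, not what the node actually does.

```python
import random


def quantise(note, grid_s):
    """Snap a (start, length) note to the nearest grid line.

    The length is rounded to the grid too, but never below one grid step.
    """
    start, length = note
    snapped_start = round(start / grid_s) * grid_s
    snapped_length = max(grid_s, round(length / grid_s) * grid_s)
    return (snapped_start, snapped_length)


def dequantise(note, max_shift_s, rng=random):
    """Nudge start and length by small random amounts, never below zero."""
    start, length = note
    new_start = max(0.0, start + rng.uniform(-max_shift_s, max_shift_s))
    new_length = max(0.0, length + rng.uniform(-max_shift_s, max_shift_s))
    return (new_start, new_length)


tidy = quantise((0.26, 0.11), grid_s=0.125)
loose = dequantise(tidy, max_shift_s=0.01, rng=random.Random(42))
```

Passing a seeded `random.Random` keeps the “human” shifts repeatable between playbacks.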

I have also changed the Mixer to 10 channels.

Enough for today, I’m off to bed with a dirty woman, well a cup of tea and a shot of Jack Daniels actually…

Cheers, Clock.

Built some more filters:

Made my Equaliser optionally Parametric:

You can put any number of filters in there.

Cheers, Clock.

Is the midi recordable so it can be rendered? This could be a really cool system for music videos and other music based productions!

At the moment, no, and neither is the output sound, but this could go on my ToDo list. For the MIDI, I imagine I could output the MIDI events to a file with timings. This means understanding the vagaries of MIDI file formats and their bloody awful 7-bit words!
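Assuming the 7-bit words in question are the variable-length quantities that Standard MIDI Files use for delta times, the encoding can be sketched as below. Each byte carries 7 bits of the value, and every byte except the last has its top bit set. The function name is made up for illustration.

```python
def encode_variable_length(value):
    """Encode a non-negative integer as a MIDI variable-length quantity."""
    if value < 0:
        raise ValueError("value must be non-negative")
    # Lowest 7 bits go last and have the continuation bit clear.
    groups = [value & 0x7F]
    value >>= 7
    while value:
        # Earlier bytes carry the higher bits and set the continuation bit.
        groups.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(groups))


print(encode_variable_length(128).hex())
```

So 127 still fits in one byte, while 128 needs two.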

I could think about outputting to CSV to start with. MIDI files do not use absolute timings in seconds, but use Pulse values and multiples of that based upon BPM, Time Signature, etc. It took me a long time to work these out the other way around for my MIDI Bake node, which reads MIDI CSV files to animate from them.
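The basic pulse-to-seconds conversion, and its inverse for writing events out, might look like the sketch below. It assumes a single constant tempo and that one beat is a quarter note; real files can change tempo part way through, which this ignores. The function names and the default of 480 pulses per quarter note are only illustrative.

```python
def ticks_to_seconds(ticks, bpm, pulses_per_quarter=480):
    """Convert a MIDI pulse count to seconds at a constant tempo."""
    seconds_per_quarter = 60.0 / bpm
    return ticks * seconds_per_quarter / pulses_per_quarter


def seconds_to_ticks(seconds, bpm, pulses_per_quarter=480):
    """Inverse conversion, rounded to the nearest whole pulse."""
    seconds_per_quarter = 60.0 / bpm
    return round(seconds * pulses_per_quarter / seconds_per_quarter)


print(ticks_to_seconds(480, bpm=120))
print(seconds_to_ticks(1.0, bpm=120))
```

At 120 BPM one quarter note of 480 pulses lasts half a second, so one second is 960 pulses.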

Outputting sound into a combined sound file for the project might also be possible since there is a function in AudaSpace to join files together. This would mean getting the length of any sounds played, then adding “Silence” sound between them to pad out to the correct length, doable, but a huge effort at the moment.

My focus is on getting the DAW Sequencer working well and generating sound from animated objects. I have a prototype of this working, but not ready to shout about it yet…

Sneak preview of it, still needs a lot of work:

Thanks for the comment!

Cheers, Clock.

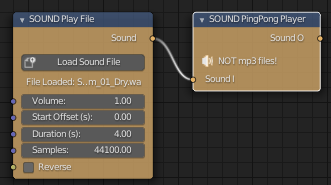

Meantime I have implemented the PingPong function that plays a sound forwards, then immediately after, backwards. This doesn’t work with MP3 files as they are totally FUBAR when played backwards…

Automatic Arpeggio generator now in place; it generates arpeggios, either climbing, or falling, based on input note:

Works in 3,4 & 5 note flavours.

Cheers, Clock.

Arpeggio now plays up to 9 notes, so full chord and down again, each magenta note in the sequencer plays an Arpeggio sequence. I have also added a Chord player, 3 to 5 notes based on input note. For “Fancy” chords, one would have to construct them from notes in the normal way.
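A minimal sketch of the idea, using MIDI note numbers. The intervals are an assumption (major chord tones carried into the next octave), as are the function names; the node itself may well pick its notes differently.

```python
# Semitone steps above the root: root, third, fifth, octave, tenth (assumed major).
MAJOR_CHORD_STEPS = [0, 4, 7, 12, 16]


def chord(root_midi, note_count=3):
    """Return the 3 to 5 chord notes built on root_midi."""
    if not 3 <= note_count <= 5:
        raise ValueError("note_count must be 3, 4 or 5")
    return [root_midi + step for step in MAJOR_CHORD_STEPS[:note_count]]


def arpeggio(root_midi, note_count=5, falling=False):
    """Play through the chord and back again without repeating the turning note."""
    notes = chord(root_midi, note_count)
    if falling:
        notes = notes[::-1]
    return notes + notes[-2::-1]


print(arpeggio(60))  # built on middle C (MIDI note 60)
```

A 5 note chord gives 5 notes out and 4 back, which is where the 9 note maximum comes from.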

Cheers, Clock.

PS. Thanks to NeXyon we will soon have a modulate() function in AudaSpace to play with.