In that case you have the volume part explicitly connected up, no?

That’s different from an implicit usage of the volume output, where you just don’t connect up anything and it magically pulls from “the” Principled Shader. But what if there are multiple?

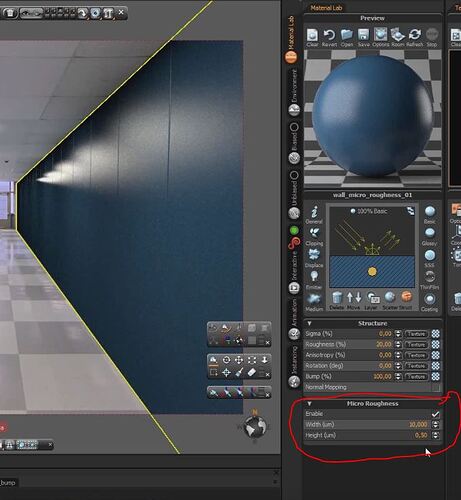

I feel like, at least at the scale this image was taken at, the fine detail is actually too coarse for most of these, and you’d have to go much finer before it reads as roughness rather than, say, a normal map.

I’d say up to roughly 27 you can probably get away with roughness (although at that point there is already quite a lot of visible structure), but 12, by the Glossy shader’s standards, is already really, really rough. Close to roughness 1, I’d say.

Yes, the finer/lower the scale is, the smoother it gets. At a very fine scale, the flat, smooth shading we know is enough. However, the reflection at a grazing angle may look different. It may be subtle at lower scales, but as you can see from the reference chart, with increasing scale it would no longer reflect as smoothly at a grazing angle.

As said, I still have to look up what the engine was. However, it was from Autodesk; like Thea, they have an option to set the exact roughness in micrometers, for industrial/product rendering, so the haptic feel is rendered perfectly.

Look at the Thea setup: you can simply set the width and height, in micrometers, that the roughness should have.

What is announced is an implicit volume connection when no connection has been made by the user.

So, both situations are handled.

Volume mixing is copied from surface mixing.

This automatic solution may be satisfactory in some cases.

In other cases, the user will have to set up volume mixing manually.

No magic solution is proposed. It is just a small bonus for very simple, rare cases.

In most cases, you will not care about volumes. The end result will just be a mix of opaque surfaces.

So, implicit volume mixing will mostly concern Principled shaders with SSS and Transmission.

Most of the time, you will mix an opaque shader, whose volume shading is similar to that of a Holdout shader, with a transmissive shader. So there is no real mixing, just a pure addition.

Things will get complicated when you mix two transmissive shaders.

But it will not be more complicated than with the current Principled shader.

The same options will be available, with the same work needed to get it right.

But in some rare cases, you will be pleasantly surprised that mixing the surfaces also does the job for the volumes well enough.
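To make the “copied from surface mixing” idea concrete, here is a minimal Python sketch. It is not Blender or Cycles code: `ShaderResult`, `mix_shader` and the closure names are hypothetical, and closures are reduced to plain weights. The only point it shows is that the Mix Shader factor is applied the same way to the surface and volume parts, so when one input carries no volume (as with an opaque shader), the result simply gets the other input's volume, scaled by the factor, added in.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ShaderResult:
    """Hypothetical shader output: closure name -> weight, for surface and volume."""
    surface: dict[str, float] = field(default_factory=dict)
    volume: dict[str, float] = field(default_factory=dict)


def _scaled(closures: dict[str, float], factor: float) -> dict[str, float]:
    # Multiply every closure weight by the mix factor.
    return {name: weight * factor for name, weight in closures.items()}


def _summed(a: dict[str, float], b: dict[str, float]) -> dict[str, float]:
    # Add two closure sets; closures present on one side only are kept as-is.
    out = dict(a)
    for name, weight in b.items():
        out[name] = out.get(name, 0.0) + weight
    return out


def mix_shader(a: ShaderResult, b: ShaderResult, fac: float) -> ShaderResult:
    """Blend two shaders; the volume part reuses exactly the surface blend."""
    return ShaderResult(
        surface=_summed(_scaled(a.surface, 1.0 - fac), _scaled(b.surface, fac)),
        volume=_summed(_scaled(a.volume, 1.0 - fac), _scaled(b.volume, fac)),
    )


# Opaque shader (no volume) mixed with a glass-like shader that has an absorption volume.
opaque = ShaderResult(surface={"diffuse": 1.0})
glass = ShaderResult(surface={"glass": 1.0}, volume={"absorption": 1.0})
mixed = mix_shader(opaque, glass, 0.5)
print(mixed.surface, mixed.volume)
```

Here `mixed.surface` is `{"diffuse": 0.5, "glass": 0.5}` and `mixed.volume` is `{"absorption": 0.5}`: the volume comes from one side only, so there is nothing to blend. Mixing two transmissive shaders that both carry volumes is where the automatic result may no longer be what you want.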

80 posts were split to a new topic: A Cycles discussion that started with MaterialX, then became about open source standards and color

Do viewport renders seem faster and less accurate in today’s build (d136a996caa9)?

Lukas Stockner’s latest update is really exciting: it improves metallic materials by changing the Fresnel algorithm, basing it on Adobe’s F82 model, which Adobe uses in its own Standard Material shader. There are more details in the project discussion.
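For reference, here is a minimal Python sketch of the F82-tint Fresnel idea as published for Adobe’s Standard Material (and later adopted by OpenPBR): start from Schlick’s approximation with the normal-incidence reflectance `F0`, then subtract a term shaped like `mu * (1 - mu)^6` so that at `mu = 1/7` (about 82°) the reflectance equals `tint` times the Schlick value. This is my reading of the published model, not Lukas’s patch; the function names are hypothetical and it works on one color channel at a time.

```python
def schlick(f0: float, mu: float) -> float:
    """Schlick Fresnel approximation; mu is the cosine of the incident angle."""
    return f0 + (1.0 - f0) * (1.0 - mu) ** 5


def f82_tint(f0: float, tint: float, mu: float) -> float:
    """Schlick Fresnel with an F82-style edge tint (one color channel).

    f0:   reflectance at normal incidence (the metal's base color)
    tint: multiplier on the Schlick value at mu = 1/7 (~82 degrees); 1.0 disables it
    mu:   cosine of the incident angle, in [0, 1]
    """
    mu_bar = 1.0 / 7.0  # cosine of roughly 82 degrees, where the tint is pinned
    # Choose the correction strength so that F(mu_bar) == tint * schlick(f0, mu_bar).
    a = schlick(f0, mu_bar) * (1.0 - tint) / (mu_bar * (1.0 - mu_bar) ** 6)
    # The mu * (1 - mu)^6 shape vanishes at normal (mu = 1) and grazing (mu = 0) incidence.
    return schlick(f0, mu) - a * mu * (1.0 - mu) ** 6


# Hypothetical gold-like values, evaluated per channel.
f0_rgb = (1.0, 0.78, 0.34)
tint_rgb = (0.9, 0.85, 0.8)
print([round(f82_tint(f, t, 0.2), 4) for f, t in zip(f0_rgb, tint_rgb)])
```

With `tint = 1` the correction vanishes and the result is plain Schlick, which is why the tint can be read as an edge-color control layered on top of the base color; normal and grazing incidence are untouched either way.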

I really feel that this work will address many of the complaints about Blender’s perceived render quality.

Is that already in the latest (today’s) 3.3 alpha build?

No, it’s not in master yet, but the build is here:

Attendees

- Brecht Van Lommel (Blender)

- Christophe Hery (Meta)

- Brian Savery (AMD)

- Nikita Sirgienko (Intel)

- Patrick Mours (NVIDIA)

- Jeffrey Liu

Notes

- Intel OneAPI: support for this landed in Blender 3.3. For anyone with an Intel Arc GPU, feedback is welcome. The main remaining issue for the 3.3 release is ahead-of-time compilation.

- AMD HIP: the main issue right now is the texture resolution bug on Vega/RDNA; we are hoping it will be fixed in ROCm 5.3, but this is not clear yet.

- Apple Metal: two optimizations landed in the past few weeks, for shader sorting and intersection kernel specialization.

- Path guiding: this was submitted for code review, currently waiting for a new OpenPGL release and updated patch. There will also be a meeting soon about integrating MNEE and path guiding.

- Spectral: various patches from Andrii Symkin are landing to refactor code for this. No actual functionality yet, but important groundwork.

- Principled v2: Lukas published a branch with an early (incomplete) version, there is a feedback topic with active discussion.

- Sampling pattern: Lukas made a patch with an improved scrambling method, but more work is needed to optimize it and integrate well.

- OptiX temporal denoise: Patrick mentions he started working on making this available from the UI.

- Many Lights: support for surfaces is getting more complete and many issues were fixed (weekly reports, feedback), but more work is still needed. From the discussion, it appears that adaptive splitting may be important to handle cases where the heuristic is not ideal. Adaptive splitting combined with importance resampling, to keep just 1 light ray for easy GPU support, will be tested.
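The last point pairs light-tree splitting with resampling so that only one shadow ray is traced. As a generic illustration of that combination (not the actual Cycles work), here is a Python sketch of resampled importance sampling over the candidates produced by splitting, using a single-sample weighted reservoir. Assumptions: each split cluster contributes one candidate together with the probability that it was picked inside its cluster, clusters are disjoint, and `target` is some cheap estimate of a light’s contribution; `resample_one_light` and the light names are hypothetical.

```python
import random


def resample_one_light(candidates, target, rng):
    """Keep one light out of the per-cluster candidates (resampled importance sampling).

    candidates: list of (light, pdf) pairs, one per disjoint split cluster, where pdf is
                the probability that the light was picked inside its cluster
    target:     unnormalized target function, e.g. a cheap contribution estimate
    rng:        random.Random instance
    Returns (light, weight) such that f(light) * weight is an unbiased estimate of the
    summed contribution of all clusters (assuming target > 0 wherever f > 0).
    """
    chosen = None
    w_sum = 0.0
    for light, pdf in candidates:
        # Resampling weight: target value over the probability of having picked this candidate.
        w = target(light) / pdf if pdf > 0.0 else 0.0
        w_sum += w
        # Single-sample weighted reservoir: replace the kept light with probability w / w_sum.
        if w > 0.0 and rng.random() * w_sum < w:
            chosen = light
    if chosen is None:
        return None, 0.0
    # Clusters are disjoint, so no 1/M factor: weight = w_sum / target(chosen).
    return chosen, w_sum / target(chosen)


rng = random.Random(7)
contribution = {"sun": 1.0, "lamp": 3.0}  # hypothetical true contributions
light, weight = resample_one_light([("sun", 1.0), ("lamp", 1.0)], contribution.get, rng)
print(light, contribution[light] * weight)  # estimate is 4.0 whichever light is kept
```

When `target` matches the true contribution, every pick yields the same estimate, so the remaining variance comes only from the mismatch between the cheap target and the real contribution. The `1/M` factor often seen in RIS belongs to the variant where all candidates are drawn from the same distribution.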

Still waiting for light linking here.

OK, so the first thing we need to be clear about is whether we mean the actual download/installer file or the size of the installed folder on one’s hard drive.

If it’s the download, Blender 3.2.1 for Windows is currently 213 MB, so even at 30% more it would be around 277 MB. Granted, that’s a bit more, but in the overall scheme of the Internet nowadays it really isn’t that much, and I say that as someone with a 100 GB monthly data limit.
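The size estimate above is simple arithmetic; as a quick check in Python (assuming decimal units, 1 GB = 1000 MB, for the data-cap comparison; the function names are just for illustration):

```python
def projected_size_mb(current_mb: float, growth: float) -> float:
    """Size after growing by a fraction, e.g. growth=0.30 for 30% more."""
    return current_mb * (1.0 + growth)


def share_of_cap_percent(size_mb: float, cap_gb: float) -> float:
    """Percentage of a monthly data cap used by one download (1 GB = 1000 MB)."""
    return size_mb / (cap_gb * 1000.0) * 100.0


new_size = projected_size_mb(213.0, 0.30)
print(f"{new_size:.0f} MB, {share_of_cap_percent(new_size, 100.0):.2f}% of a 100 GB cap")
# prints: 277 MB, 0.28% of a 100 GB cap
```

So even the pessimistic 30% figure means a download of roughly 277 MB, under 0.3% of a 100 GB monthly allowance.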

I think that’s really blowing things out of proportion. We are talking about the Cycles core; there’s a good chance it isn’t even loaded at startup, and if you only use Eevee it may never load at all. So load time and RAM usage remain very low.

Basic SATA SSDs of 512 GB or less are dirt cheap nowadays; the CPU, RAM, motherboard, PSU, GPU and very likely even the case will each cost more. So if a little extra disk space is unaffordable, we don’t even have a PC at this stage, and running Blender is a moot point.

Also, let’s look at the irony of all this. On one hand we have threads and posts about people wanting Cycles to render at ‘better quality’, and here we are talking about an addition to Cycles that will do just that, but it’s all too hard because it may make Blender use a little more disk space or a few more MB of RAM… Guess they should stop all work on Geometry Nodes (can’t add any more nodes), Texture Painting or Sculpting; surely that’s going to make Blender load slower…

16 posts were split to a new topic: 7-Zip as a more efficient alternative to Zip for compressing Blender builds

Hi guys, I’ve split the extensive spectral Cycles subdiscussion from this thread to the Cycles Spectral Rendering thread. Please feel free to continue the spectral Cycles discussion there, thanks.

While you’re at it, can you pull the 7-Zip conversation above out as well?

What forum category? It’s not anywhere I typically visit. Following the link takes me directly to the thread, but I’m not seeing where this thread exists.

It’s under artwork > blender tests. The posts were appended to an old spectral rendering test thread.

Done.

Ok, thanks.